From SQL to Slack: Automating Data Workflows with BigFunctions

Every data analyst knows the feeling: you've uncovered an important insight, but turning that finding into action requires an engineering ticket, multiple meetings, and weeks of waiting. What if you could write a SQL query and have it automatically notify your team on Slack? Or enrich your customer data with third-party information without building a complex pipeline?

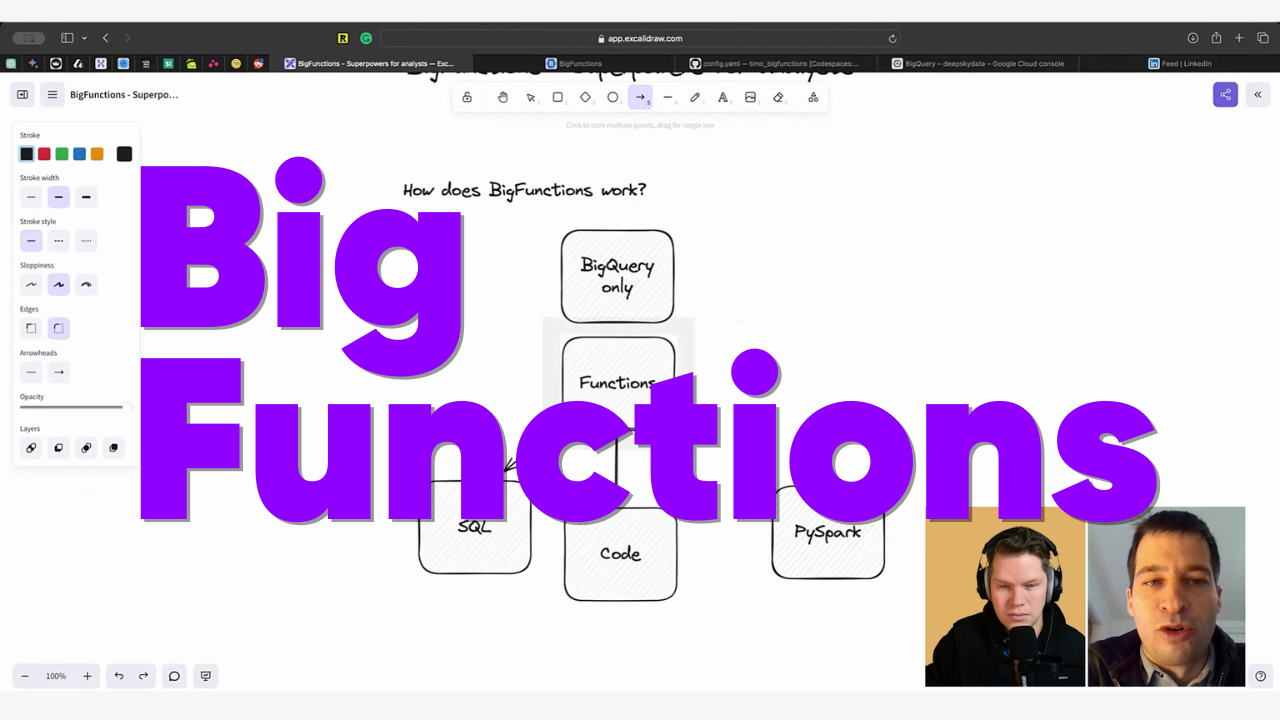

BigFunctions transforms BigQuery from a data warehouse into an automation engine, letting analysts trigger actions directly from their SQL queries. In just five minutes, you can deploy functions that connect your data to external services, with no infrastructure to manage.

This isn't just about saving engineering time. It's about empowering analysts to complete the full cycle of data work, from insight to action, without dependencies. Let's explore how BigFunctions bridges this gap and improves how data teams deliver value.

Why BigFunctions Bridges the Gap Between Data Analysis and Action

Data teams face a constant challenge: turning insights into action. While analysts excel at uncovering valuable patterns in data through SQL, implementing these findings often requires engineering support. A simple task like automatically sharing key metrics via Slack can turn into a week-long project, requiring infrastructure setup, API integration, and deployment processes. This creates bottlenecks and delays the delivery of valuable insights to stakeholders.

BigFunctions fundamentally changes this dynamic by allowing analysts to trigger actions directly from SQL queries. Instead of building and maintaining separate infrastructure for each integration, analysts can leverage pre-built functions or create custom ones that connect directly to external services. This removes the traditional dependency on engineering teams for implementation while maintaining security and scalability.

The applications are immediately practical. Analysts can push daily metrics to Slack channels, enrich customer data with third-party APIs, or standardize complex calculations across the organization - all from within their SQL workflows. For example, a single query can analyze product usage patterns and automatically notify relevant teams when specific thresholds are met, a process that previously required multiple systems and team handoffs.

This capability shift represents more than just technical convenience; it's about empowering analysts to complete the full cycle of data work. By bridging the gap between analysis and action, BigFunctions enables faster decision-making and more agile data operations. Teams can experiment with new metrics and automation without lengthy implementation cycles, leading to more innovative uses of their data infrastructure.

Here is a 40-minute introduction and hands-on demo I recorded with Paul, the creator of the BigFunctions framework:

From 5-Day Projects to 5-Minute Solutions: Real-World BigFunctions Examples

Slack Notifications Pipeline (5-minute implementation)

Most data teams have experienced this scenario: stakeholders want regular updates about key metrics, but setting up automated notifications traditionally requires multiple components - a scheduled script, API integration, error handling, and monitoring. What could be a simple notification often becomes a multi-day engineering project.

Enter BigFunctions. Here's how a weekly course popularity notification goes from concept to production in five minutes:

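- Write a simple SQL query to get your metrics:

```sql
SELECT course_name, COUNT(*) AS starts
FROM course_starts
WHERE start_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 7 DAY)
GROUP BY course_name
ORDER BY starts DESC
LIMIT 1
```

- Add the BigFunctions Slack integration by wrapping that query as a subquery (replace 'your-webhook-url' with your Slack webhook URL):

```sql
SELECT bigfunctions.eu.send_slack_message(
  'Most popular course this week: ' || course_name || ' with ' || CAST(starts AS STRING) || ' new students',
  'your-webhook-url'
)
FROM (
  SELECT course_name, COUNT(*) AS starts
  FROM course_starts
  WHERE start_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 7 DAY)
  GROUP BY course_name
  ORDER BY starts DESC
  LIMIT 1
)
```

- Schedule the query in BigQuery's native interface.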

That's it. Apart from keeping the webhook URL secret, there's no infrastructure to maintain and no deployment pipeline to manage. A workflow that would typically require multiple services and ongoing maintenance now runs as a simple scheduled query.
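To see what the scheduled query actually sends, here is a minimal Python sketch that reproduces the message string and prepares the kind of request a standard Slack incoming webhook accepts: an HTTP POST whose JSON body carries a `text` field. It assumes `send_slack_message` posts to an ordinary incoming webhook; `format_message` and `build_webhook_request` are hypothetical helpers, and the course name and URL are placeholders. Nothing is sent unless you call `urlopen` yourself.

```python
import json
import urllib.request

# Assumption: send_slack_message posts to a standard Slack incoming webhook,
# which accepts an HTTP POST whose JSON body has a "text" field.


def format_message(course_name, starts):
    """Build the same string as the SQL concatenation in the query."""
    return "Most popular course this week: " + course_name + " with " + str(starts) + " new students"


def build_webhook_request(webhook_url, text):
    """Prepare (but do not send) a POST request for a Slack incoming webhook."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; substitute real query output and your own webhook URL.
    message = format_message("Intro to SQL", 42)
    request = build_webhook_request("https://hooks.slack.com/services/PLACEHOLDER", message)
    print(request.get_method(), request.data.decode("utf-8"))
    # To actually post the message: urllib.request.urlopen(request)
```

Keeping formatting and sending separate makes it easy to check the exact text your team will see before pointing the query at a real channel.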

The impact extends beyond time savings. Analysts can now experiment with different metrics and notification patterns without engineering support. When a team wants to track a new KPI, it's a matter of minutes to implement the notification, fostering a more agile, data-informed culture.

Data Enrichment Without Engineering (30-minute setup)

Understanding website traffic sources often requires context beyond basic referral URLs. Traditional approaches to enriching this data might involve building ETL pipelines or maintaining separate services. BigFunctions transforms this into a straightforward SQL operation.

Here's a practical implementation for enriching referral data:

```sql
SELECT
  referrer_url,
  bigfunctions.eu.get_webpage_metadata(referrer_url) AS metadata
FROM website_traffic
WHERE DATE(visit_timestamp) = CURRENT_DATE()
```

The function returns structured metadata including site descriptions, titles, and languages, which is valuable context for marketing analysis. To make this cost-effective and scalable:

- Process new URLs only, so each referrer is enriched once:

```sql
SELECT DISTINCT t.referrer_url
FROM website_traffic AS t
LEFT JOIN referral_metadata AS m
  ON t.referrer_url = m.referrer_url
WHERE m.referrer_url IS NULL
```

- Add quota monitoring (BigQuery doesn't support SELECT ... INTO a table, so append the count with INSERT):

```sql
-- Assumes monitoring.api_usage has columns (usage_date DATE, daily_enrichment_count INT64)
INSERT INTO monitoring.api_usage (usage_date, daily_enrichment_count)
SELECT CURRENT_DATE(), COUNT(*)
FROM website_traffic
WHERE DATE(visit_timestamp) = CURRENT_DATE()
```

This approach turns what would typically be a dedicated data pipeline into a simple SQL workflow. Marketing teams can immediately access enriched data for analysis, while data teams keep control over API usage and costs through standard SQL patterns.
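Both safeguards boil down to simple set logic. Here is a minimal Python sketch, assuming a hypothetical `daily_quota` budget for the enrichment API (the article doesn't specify one): keep only distinct referrer URLs that aren't already in `referral_metadata`, and stop once the day's quota is reached. In BigQuery, the same cap could be added to the new-URLs query with a `LIMIT`.

```python
def urls_to_enrich(visits, already_enriched, daily_quota):
    """Return distinct, not-yet-enriched referrer URLs, capped at daily_quota.

    visits: referrer URLs seen today (may contain duplicates or None).
    already_enriched: set of URLs already present in referral_metadata.
    daily_quota: hypothetical maximum number of enrichment calls per day.
    """
    selected = []
    seen = set()
    for url in visits:
        # Stop before exceeding the (assumed) daily API budget.
        if len(selected) >= daily_quota:
            break
        # Skip empty referrers, repeats within today's data, and URLs enriched
        # on earlier runs (the equivalent of LEFT JOIN ... WHERE m.referrer_url IS NULL).
        if not url or url in seen or url in already_enriched:
            continue
        seen.add(url)
        selected.append(url)
    return selected


if __name__ == "__main__":
    today = ["https://a.example", "https://b.example", "https://a.example", None, "https://c.example"]
    known = {"https://b.example"}
    print(urls_to_enrich(today, known, daily_quota=10))  # ['https://a.example', 'https://c.example']
```

Deduplicating before calling the API matters because enrichment cost scales with calls, not with visits.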

The same pattern extends to other enrichment sources - company information APIs, weather data, or AI-powered text analysis, all accessible through SQL queries rather than separate infrastructure.
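This article doesn't describe how `get_webpage_metadata` works internally. As an illustration only, and not BigFunctions' implementation, here is a minimal Python sketch that pulls the same kind of fields (title, description, language) out of a page's HTML with the standard library's `html.parser`. `MetadataParser` and `extract_metadata` are hypothetical names, and fetching the page is left out.

```python
from html.parser import HTMLParser

# Illustrative only: this is NOT how BigFunctions implements get_webpage_metadata.


class MetadataParser(HTMLParser):
    """Collects the <title>, <meta name="description"> and <html lang> of a page."""

    def __init__(self):
        super().__init__()
        self.title_parts = []  # title text may arrive in several chunks
        self.description = None
        self.language = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # html.parser lowercases tag and attribute names
        if tag == "html" and attributes.get("lang"):
            self.language = attributes["lang"]
        elif tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def extract_metadata(html):
    """Return title, description and language (None when a field is missing)."""
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    title = "".join(parser.title_parts).strip() or None
    return {"title": title, "description": parser.description, "language": parser.language}


if __name__ == "__main__":
    page = '<html lang="en"><head><title>Example</title><meta name="description" content="Demo"></head></html>'
    print(extract_metadata(page))
```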

AI-Powered Analysis Integration (1-hour project)

App store reviews contain valuable customer feedback, but manually analyzing thousands of comments is impractical. BigFunctions enables automated sentiment analysis and categorization directly in BigQuery using AI models.

Here's the implementation approach:

```sql
SELECT
  review_text,
  -- A higher score indicates more positive sentiment; a lower score indicates more negative sentiment
  bigfunctions.eu.sentiment_score(review_text) AS sentiment_score,
  bigfunctions.eu.ask_ai(
    '''Question: Which main feature is this review about?
Answer: return exactly one value from these options: user interface, pricing, performance
Review: ''' || review_text,
    'gemini-pro'
  ) AS category
FROM app_store_reviews
WHERE DATE(review_date) = CURRENT_DATE()
```

To ensure reliable results:

- Run analysis in batches of 1000 reviews

- Store results in a separate table for cost efficiency

- Add validation checks for AI outputs

This transforms unstructured feedback into actionable data that product teams can immediately use. The entire pipeline, from raw reviews to categorized insights, runs within BigQuery without additional infrastructure.
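The batching and validation recommendations can be sketched in a few lines of Python: split reviews into batches of 1,000, and accept an AI answer only if, after normalizing case, whitespace, and a trailing period, it matches one of the three categories in the prompt. `batched` and `validate_category` are hypothetical helpers; off-list answers come back as `None` so they can be flagged or re-run instead of stored as-is.

```python
from itertools import islice

# The three options offered to the model in the prompt above.
ALLOWED_CATEGORIES = {"user interface", "pricing", "performance"}


def batched(items, size=1000):
    """Yield successive lists of at most `size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def validate_category(raw_answer):
    """Return the normalized category, or None if the model answered off-list."""
    if raw_answer is None:
        return None
    # Trim whitespace and a trailing period, lowercase, collapse inner spaces.
    normalized = " ".join(raw_answer.strip().strip(".").lower().split())
    return normalized if normalized in ALLOWED_CATEGORIES else None


if __name__ == "__main__":
    answers = ["Pricing", "performance.", "battery life"]
    print([validate_category(a) for a in answers])  # ['pricing', 'performance', None]
```

Returning `None` rather than guessing keeps bad classifications out of dashboards and makes the failure rate easy to count.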

Key benefits:

- Immediate access to AI capabilities

- Consistent analysis across all reviews

- Easy integration with existing dashboards and reports

Getting Started: Implementing BigFunctions in Your Data Stack

Initial Setup (5 minutes)

Setting up BigFunctions requires minimal configuration. First, install the bigfun CLI, which ships with the bigfunctions Python package:

```bash
pip install bigfunctions
```

Create your first function:

From the docs:

"Functions are defined as yaml files under bigfunctions folder. To create your first function locally, the easiest is to download an existing yaml file of unytics/bigfunctions Github repo.

For instance to download is_email_valid.yaml into bigfunctions folder, do:"

```bash
bigfun get is_email_valid
```

Deploy the function (make sure to check all requirements in the docs first):

```bash
bigfun deploy is_email_valid
```

The function becomes available in your specified dataset within minutes, ready to use in queries. This minimal setup provides immediate access to both pre-built functions and the framework for custom development.

Integration with Existing Workflows

BigFunctions integrates with existing dbt workflows: you call functions directly in your dbt models. This allows you to:

- Add notification logic to your presentation layer models

- Enrich data as part of your transformation pipeline

- Maintain version control of function usage

- Document function dependencies alongside models

Beyond dbt, BigFunctions works with any tool that generates BigQuery SQL, including:

- Scheduled queries in BigQuery

- BI tools (as long as they query BigQuery live rather than serving cached data)

- Custom data applications

Best practices for integration:

- Store credentials securely using your existing patterns

- Monitor usage through standard BigQuery logging

- Include function tests in your CI/CD pipeline

- Document function dependencies for team visibility

The key advantage is maintaining your existing workflow while adding powerful integration capabilities without additional infrastructure.

BigFunctions represents an interesting shift in how data teams can deliver value. By eliminating the gap between engineering, analysis and action, it enables analysts to build powerful data workflows directly in BigQuery without engineering support.

From sending automated Slack notifications to enriching data with AI insights, what once took days of engineering work now requires just a few lines of SQL. The simplicity of setup combined with seamless integration into existing tools like dbt makes it an accessible solution for teams of any size.

As data teams continue to face pressure to deliver insights faster, BigFunctions offers a practical path to more agile, automated data operations. Whether you're looking to streamline communications, enrich your data, or experiment with AI integrations, BigFunctions provides the tools to turn your BigQuery project into a comprehensive data enablement platform.

Start with a simple Slack notification - you might be surprised how quickly your team discovers new ways to bridge the gap between insight and action.
