How to refactor your tracking design

Imagine the not-so-unlikely situation of starting a new job as an analyst, where behavioral data analysis (aka marketing & product analytics) is essential. This involves tracking activities on the marketing website and within the product, and numerous questions quickly arise. People want to understand aspects like the onboarding process and the effectiveness of recently released features.

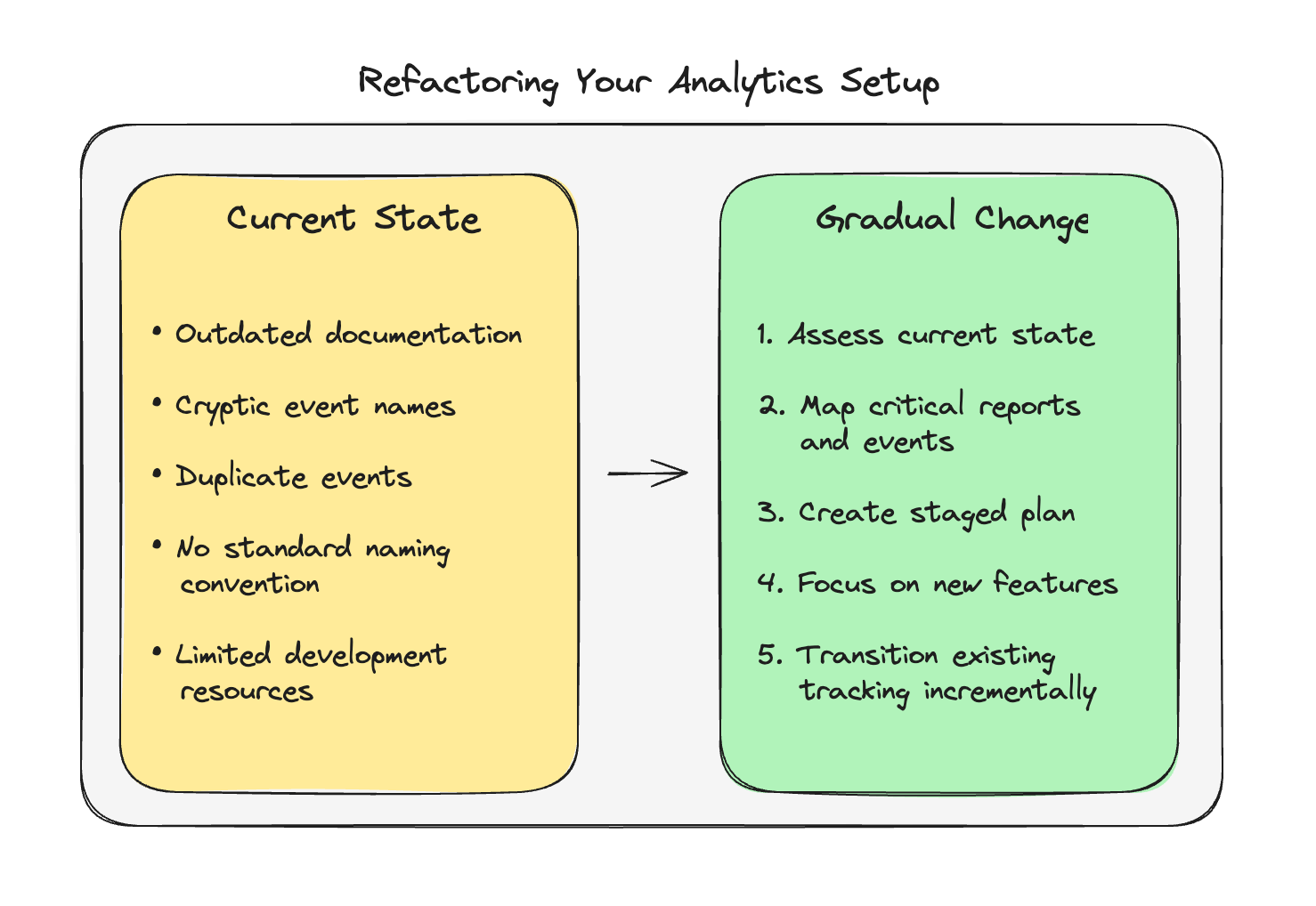

As a new employee, you're highly motivated. However, when you begin to investigate the data, you discover it's not in good shape. The documentation is outdated, and when reviewing the analytics data, only one or two events make sense. Many events appear cryptic or duplicated.

Given your experience, creating a new structure is not a big deal. You develop a two-week plan that includes:

- Conducting interviews

- Performing event storming sessions with marketing, product, and sales teams

- Mapping customer and user journeys

- Creating an event data design to address emerging questions

This approach is inspired by a lovely book on event data design. Everything seems promising until you discuss the plan with the CTO during lunch.

When you explain that you've created a new design that just needs implementation, the CTO is surprised. Sprints are already planned for the next four to five months, and resources for tracking implementation are limited. "We did a whole sprint on tracking implementation some months ago. That's all we can do." Your ambitious plan faces significant challenges.

The reality is that discussing better tracking design is easy. Talking and sketching ideas on a whiteboard costs nothing. While it's valuable to understand the ideal state, you must also confront practical limitations.

You're facing two big problems. First, how do you get the resources to implement anything? Second, what do you do with all the existing events while you transition? Let's focus on how to actually refactor your tracking setup with these constraints in mind.

Let me show you four different strategies for tracking event refactoring.

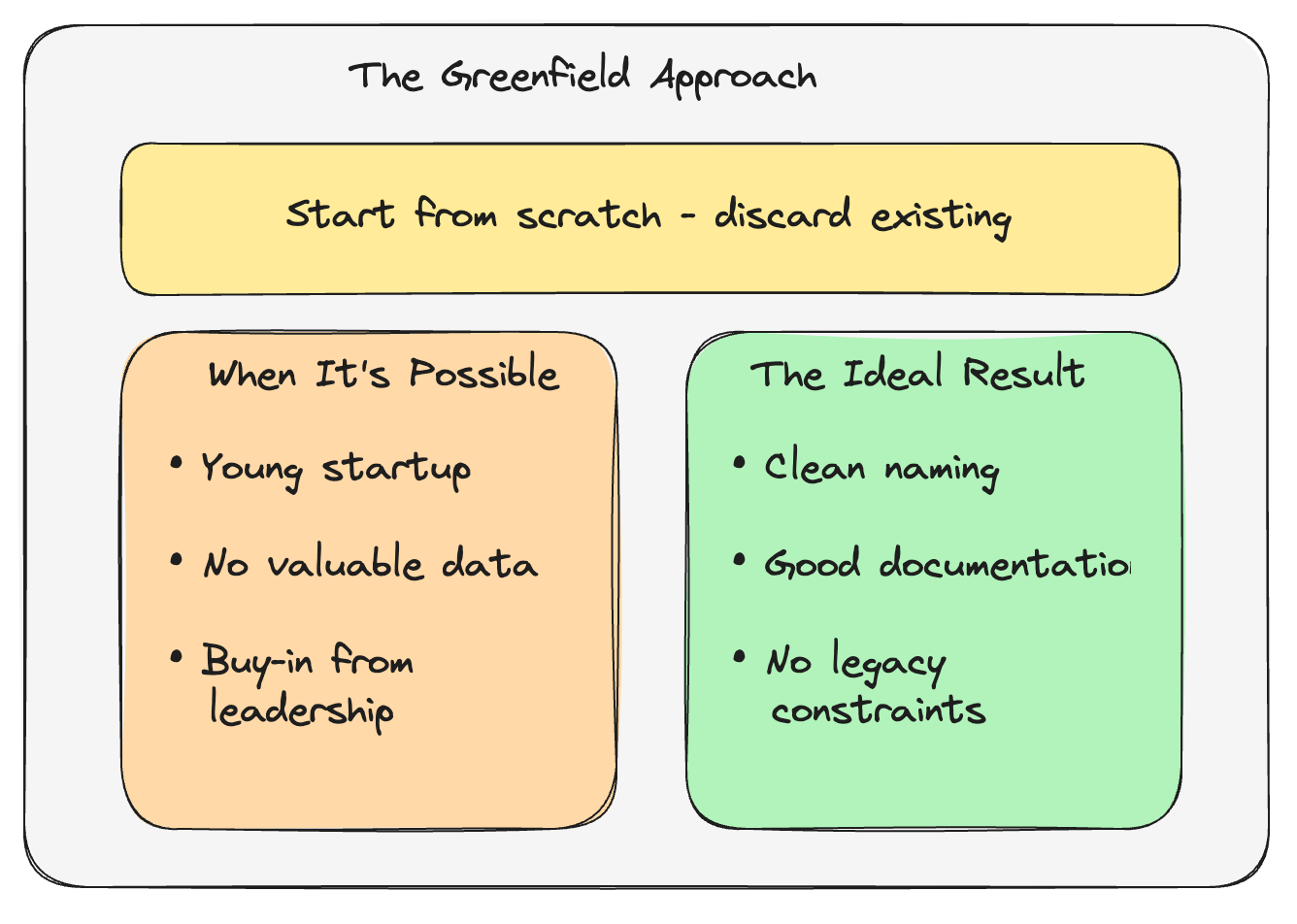

Strategy 1: The Greenfield

The Greenfield strategy is quite a rare approach, typically occurring in specific circumstances. It's most common when working with young organizations or startups that already have a temporary setup in place.

In this scenario, the organization knows that its current system will be replaced and is comfortable with that. This allows for a complete rebuild from scratch, which involves several radical steps.

First, you'll need to throw away existing systems entirely. This means removing all tracking code, eliminating integrations, and shutting down the current analytics tools you're using.

Next, you'll retire or delete all previously collected data. This can be difficult psychologically for organizations that have been collecting data for any length of time, but it's necessary for a true fresh start.

Finally, you'll start entirely anew with proper planning, documentation, and implementation. This gives you the opportunity to avoid all the mistakes and technical debt that accumulated in the previous system.

The Greenfield strategy often emerges from a place of desperation, usually after multiple unsuccessful attempts to make the existing system work. It becomes viable in several specific situations.

When the previous setup lacks real value, there's little reason to maintain it. If nobody is using the dashboards or the insights aren't driving decisions, the cost of starting over is minimal.

If the data is questionable or unusable due to implementation errors or poor design, continuing to build on this foundation only extends the problems.

The beauty of Greenfield is the freedom it gives you. No legacy constraints means you can implement current best practices from day one. You can design your event taxonomy properly, set up clear naming conventions, and build documentation that actually makes sense. But it is like a unicorn: wonderful to imagine, and you will rarely see one in practice.

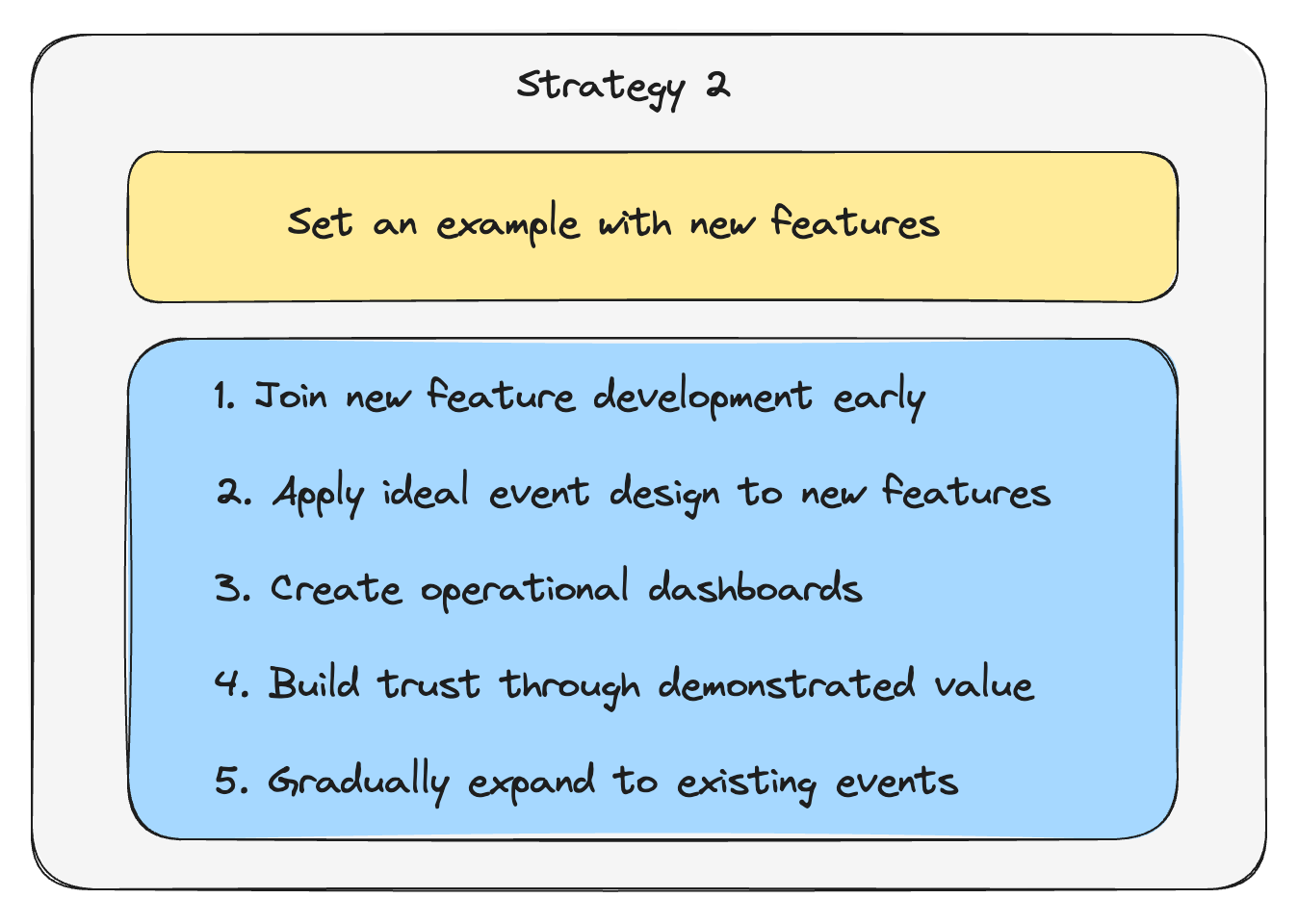

Strategy 2: New features first

When facing reality, we cannot simply build everything from scratch. Most of the time, there is already something in place that cannot be completely discarded. Core reports use existing data with a few events, and people have become accustomed to this information over time.

You cannot abruptly remove everything and tell people that their previous metrics were wrong. Such a confrontational approach is unproductive and not worth pursuing.

You might call this the "golden handcuffs" of analytics - you're locked into a system that's deeply flawed, but it's also deeply embedded in your organization's decision-making processes. Breaking free requires finesse, not force.

When you can't replace existing systems, there are two core strategies. The first is to leave the old setup alone and show what good looks like by applying your new design to new features.

When the product team is developing new features, you have an opportunity to implement an improved event data structure. By doing this, you set an example of how good event data design can look.

But most people won't immediately recognize the benefits of a better naming convention or clever property usage. The real value of your new event data design will become visible when you create feature reports and analyses. Get involved early in the process, design events, and create feature dashboards before launch. Do an excellent job from event data design through feature reporting, analysis, and recommendations.
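To make "design events before launch" a bit more concrete, here is a minimal sketch of how a new feature's events could be written down and checked against a naming convention. Everything in it is an assumption for illustration: the `object_action` snake_case convention, the `EventDefinition` class, and the example event names are hypothetical, so swap in whatever convention your team agrees on.

```python
import re
from dataclasses import dataclass, field

# Assumed convention (hypothetical): "<object>_<action>" in snake_case,
# e.g. "report_exported". Properties are plain snake_case.
EVENT_NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z]+)+$")
PROPERTY_NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z]+)*$")


@dataclass
class EventDefinition:
    """One designed event for a new feature, documented before launch."""
    name: str
    description: str
    properties: dict[str, type] = field(default_factory=dict)

    def problems(self) -> list[str]:
        """Return convention violations; an empty list means the design passes."""
        found = []
        if not EVENT_NAME_PATTERN.match(self.name):
            found.append(f"event name '{self.name}' is not snake_case object_action")
        if not self.description.strip():
            found.append(f"event '{self.name}' has no description")
        for prop in self.properties:
            if not PROPERTY_NAME_PATTERN.match(prop):
                found.append(f"property '{prop}' is not snake_case")
        return found


# A well-designed event for a hypothetical "export report" feature
good = EventDefinition(
    name="report_exported",
    description="User exported a report from the reporting page",
    properties={"report_type": str, "row_count": int},
)

# The kind of cryptic event you typically find in a legacy setup
legacy_style = EventDefinition(name="ExportClick", description="", properties={"ReportType": str})

good_problems = good.problems()
legacy_problems = legacy_style.problems()
```

A check like this could run in code review or CI, so the convention is enforced for every new feature without anyone having to police it by hand.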

If you execute this approach well, people will be impressed by the new analytics approach. You'll build trust, and when stakeholders become uncertain about existing reporting, they'll be more open to your proposed changes. The benefit of this method is that you can add new features without adapting existing analytics tools, unless you want to reuse or slightly modify old events.

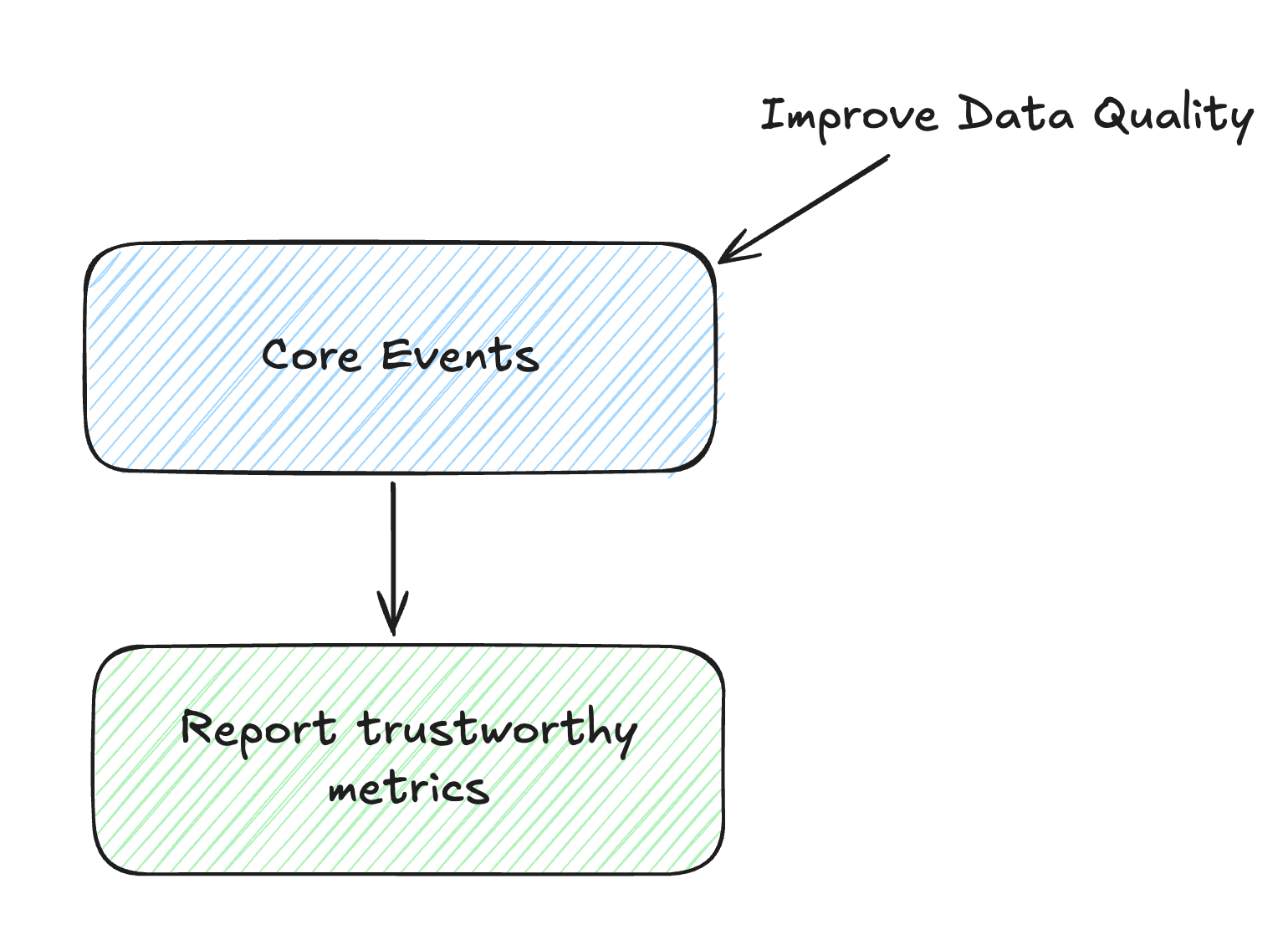

Strategy 3: Core events renovation

You can also start with the core events. If you enter a new setup and notice that existing metrics are not trusted, this presents an opportunity to re-implement key events with best practices.

Consider selecting four to five core events and applying rigorous implementation techniques. For example, you might move these events to the server side to immediately improve data quality and eliminate potential browser-related data drops. Better data quality is also a strong argument when you ask for resources.

The implementation is straightforward, but managing event name changes can be tricky. Different analytics tools handle event naming and changes differently.

The old Google Analytics had significant limitations with event renaming. You couldn't just change names without losing historical data, forcing many teams to maintain outdated naming conventions far longer than they should have (some analytics platforms still have this issue).

GA4 now allows event renaming, which is a welcome improvement. This gives you more flexibility to clean up your taxonomy while preserving your historical data.

Platforms like Amplitude, PostHog, and Mixpanel offer more flexible options for handling event transitions. You can rename the display of existing events without changing the underlying data. You can also merge old and new event names to maintain reporting continuity, which is extremely valuable during a transition.

When changing event names, you should first check your analytics tool's capabilities to understand what's possible. Then verify if event name changes or merging are supported in your specific implementation. Finally, ensure that your approach maintains long-term reporting consistency so stakeholders don't lose access to historical trends.
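If your tool cannot merge names for you, the same idea can be approximated in your own reporting layer. The sketch below maps legacy names onto the renovated core events so that old and new data share one trend line. The mapping, the event names, and the `monthly_counts` helper are all hypothetical, and this is not how any specific analytics platform implements merging.

```python
from collections import Counter

# Hypothetical mapping from legacy event names to renovated core events
LEGACY_TO_CORE = {
    "signup_btn_click_v2": "account_created",
    "SignUp Complete": "account_created",
    "sub_start": "subscription_started",
}


def canonical_name(raw_name: str) -> str:
    """Map a legacy name to its core event; unknown names pass through unchanged."""
    return LEGACY_TO_CORE.get(raw_name, raw_name)


def monthly_counts(events: list[dict]) -> Counter:
    """Count events per (month, canonical name) so history stays continuous."""
    counts = Counter()
    for event in events:
        month = event["timestamp"][:7]  # "YYYY-MM" from an ISO 8601 string
        counts[(month, canonical_name(event["name"]))] += 1
    return counts


# Two legacy events in January, one renovated event in February
events = [
    {"name": "SignUp Complete", "timestamp": "2024-01-15T10:00:00"},
    {"name": "signup_btn_click_v2", "timestamp": "2024-01-20T09:30:00"},
    {"name": "account_created", "timestamp": "2024-02-02T14:10:00"},
]
counts = monthly_counts(events)
```

Keeping the mapping in one place also documents which legacy events were folded into which core event, which helps when stakeholders ask why a historical number looks the way it does.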

This approach allows you to gradually improve your analytics implementation while maintaining historical data integrity. It's like renovating a house room by room while still living in it - more challenging than building from scratch, but often the only practical option.

This can also be combined with Strategy 2.

Strategy 4: The Data Warehouse

When it comes to event data design (or model), I prefer an approach that involves managing most of the event data processing in the data warehouse. With this method, we track only about 5% of events in the front end, sourcing the majority of events from the application database and external services like Stripe.

In this setup, you prepare your entire event data model in the data warehouse and then make it available to analytics platforms such as Amplitude, Mixpanel, or PostHog. Alternatively, you can use tools like Mitzu to visualize event data directly from the warehouse without copying data to another system.
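As a rough illustration of sourcing events from the application database, the sketch below uses an in-memory SQLite database as a stand-in for a warehouse. The `accounts` and `payments` tables, their columns, and the event names are assumptions made up for this example; in a real setup the payments table might be synced from a billing provider and the model would live in your transformation layer.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse in this sketch
conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical source tables: one from the app database, one from billing
    CREATE TABLE accounts (id INTEGER, created_at TEXT);
    CREATE TABLE payments (id INTEGER, account_id INTEGER, paid_at TEXT, amount REAL);

    INSERT INTO accounts VALUES (1, '2024-03-01T08:00:00'), (2, '2024-03-02T09:00:00');
    INSERT INTO payments VALUES (10, 1, '2024-03-05T12:00:00', 49.0);

    -- One view gives every source the same event shape and naming convention
    CREATE VIEW events AS
        SELECT 'account_created' AS event_name,
               id AS account_id,
               created_at AS occurred_at,
               'app_db' AS source
        FROM accounts
        UNION ALL
        SELECT 'payment_received', account_id, paid_at, 'billing'
        FROM payments;
""")

# The unified event stream, ready to be synced to or queried by an analytics tool
rows = conn.execute(
    "SELECT event_name, account_id, occurred_at FROM events ORDER BY occurred_at"
).fetchall()
```

No front-end tracking was needed for either event here: both are derived from rows the application already writes.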

The first major benefit is full control over event definition and naming. You can standardize naming conventions across your entire organization, regardless of where the event originated.

You also gain complete flexibility to rename or remodel events. Changed your mind about your taxonomy? No problem - just update your data model in the warehouse.

Perhaps most powerfully, you can define how long changes are applied retroactively. Want to fix a calculation error that affected the last six months of data? You can do that without losing historical information.
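Here is a small sketch of what a time-bounded retroactive fix could look like. The bug (amounts logged in cents instead of dollars), the date window, and the `remodel` function are invented for illustration. The point is only that the raw data stays untouched while the modeled layer applies the correction to the affected period.

```python
from datetime import date

# Hypothetical bug window: amounts were recorded in cents instead of dollars
FIX_FROM = date(2024, 1, 1)
FIX_UNTIL = date(2024, 6, 30)


def remodel(event: dict) -> dict:
    """Return a corrected copy of the event; the raw input is not modified."""
    occurred = date.fromisoformat(event["occurred_on"])
    fixed = dict(event)
    if FIX_FROM <= occurred <= FIX_UNTIL:
        fixed["amount"] = event["amount"] / 100  # cents -> dollars
    return fixed


raw_events = [
    {"name": "payment_received", "occurred_on": "2023-12-15", "amount": 49.0},
    {"name": "payment_received", "occurred_on": "2024-03-10", "amount": 4900},
    {"name": "payment_received", "occurred_on": "2024-07-01", "amount": 49.0},
]

# Only the event inside the bug window is changed
modeled = [remodel(event) for event in raw_events]
```

Because the correction lives in the model rather than in the raw data, you can widen, narrow, or remove the window later and rebuild without losing anything.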

When syncing data back to analytics platforms, it's crucial to understand their sync modes. Mixpanel, for example, supports applying edits and deletions to historical data through its Mirror feature. Amplitude currently supports this only for specific data warehouses. PostHog directly queries your data in your warehouse, so any change will be applied immediately.

This approach is most suitable when you already have a data warehouse and your data team is already pulling information for BI reporting. It's perfect if you want to consolidate data and unlock better data quality by applying consistent transformation rules. It really shines when you aim to combine events from different platforms into a unified view of customer behavior.

I've seen this work particularly well for companies with complex products where user actions span across multiple platforms or services. For instance, a SaaS company might want to analyze how users move between their web app, mobile app, and integration with third-party tools.

While not definitively the future of product analytics, this method is increasingly gaining traction due to its flexibility and enhanced analytical capabilities. The days of siloed event data are numbered, and a warehouse-first approach puts you ahead of the curve.

Let me know if these strategies would work for you when you refactor your event data setup.
