The business questions scale, but your analytics setup doesn't

When implementing data analytics, there's this tricky inflection point that often catches everyone by surprise. It's that moment when your initial dashboards finally deliver value, people start using them regularly, and then - inevitably - the follow-up questions start rolling in.

How Data Projects Usually Get Started

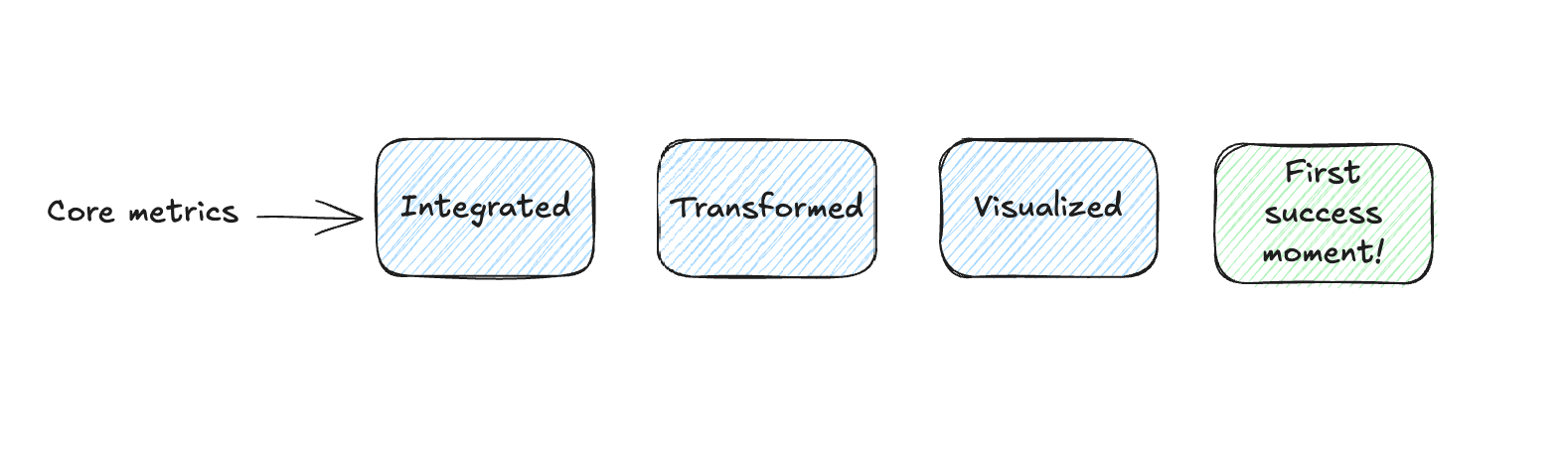

Let's start with a very simplified picture. As a first step, once you have some experience, you establish a metric system or build a metric tree. This covers the 10 to 20 core metrics that matter to the business.
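To make the idea of a metric tree a bit more concrete, here is a minimal sketch in Python. The `Metric` class and the metric names (`revenue`, `orders`, and so on) are made up for illustration; a real tree depends entirely on the business.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """One node in a metric tree: a metric and the metrics that drive it."""
    name: str
    children: list = field(default_factory=list)

    def leaves(self):
        """Return the names of the input metrics at the bottom of the tree."""
        if not self.children:
            return [self.name]
        names = []
        for child in self.children:
            names.extend(child.leaves())
        return names


# Hypothetical example: revenue is driven by orders and average order value,
# and orders are driven by sessions and conversion rate.
revenue = Metric("revenue", [
    Metric("orders", [Metric("sessions"), Metric("conversion_rate")]),
    Metric("average_order_value"),
])

input_metrics = revenue.leaves()
```

The leaves are the input metrics teams can actually influence, which is why the tree is a useful starting point for deciding what to integrate and model first.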

With this foundation, you take care of data integration. How hard this is depends on where the data comes from. Some sources are straightforward, like ad platform data. Others are complicated: any kind of unusual system you have to pull data from.

With data integration in place, you develop your data model. It doesn't really matter what kind of approach you take, but at least you take some data modeling approach. In the end, you'll have data ready for analysis that you can hook up with your analytics or BI tool to present the first insights and metrics in dashboards and reports.

The Crucial Moment

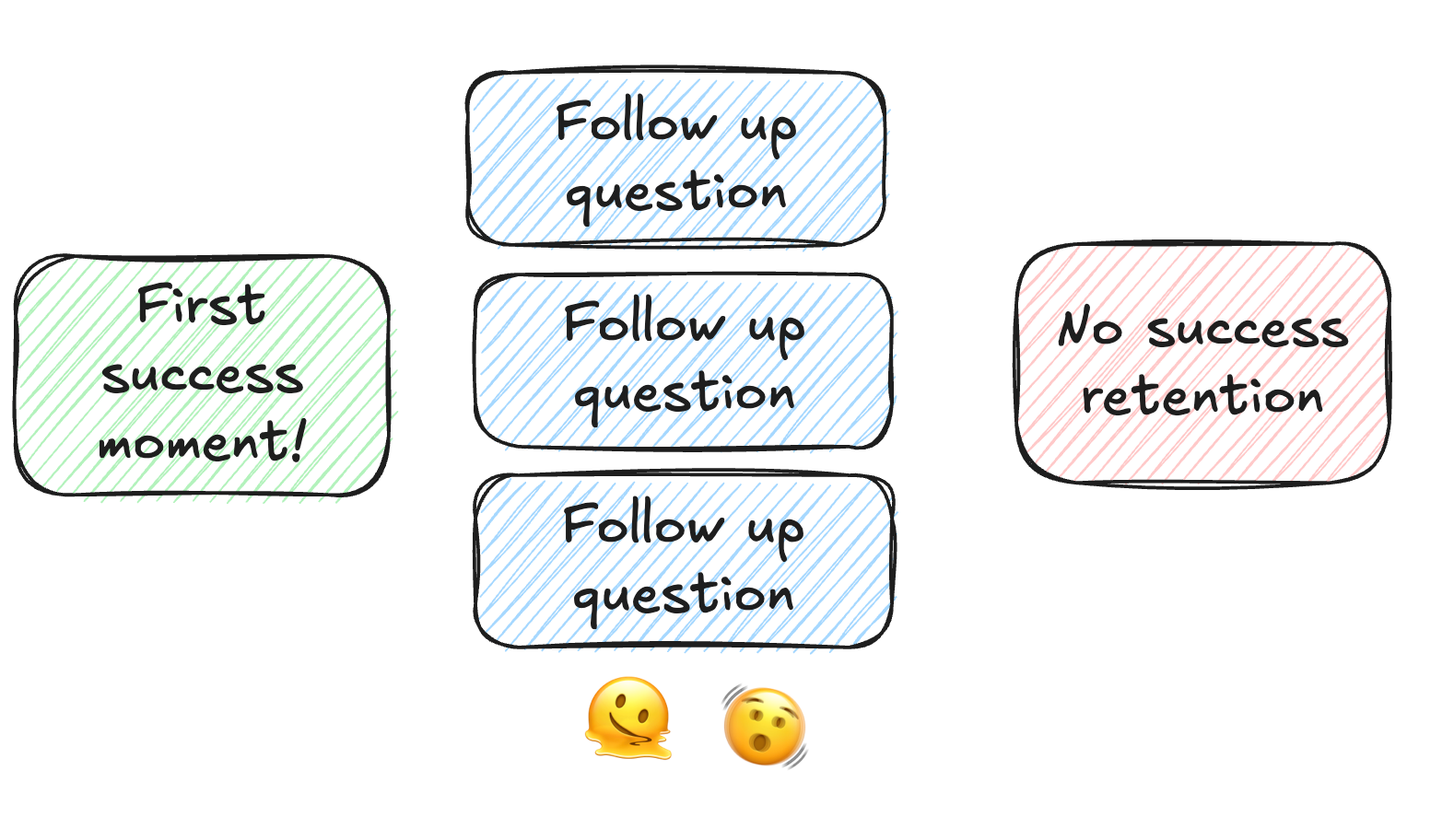

And now comes the crucial part. If you've done this right, the stakeholders who work with the dashboards will start to dive deep into them. They'll use them in their weekly meetings. You've basically handed them a data-backed answer to their important questions.

This is the make-or-break moment. If everything works out, the first set of insights will generate a lot of follow-up questions. The tricky thing with these follow-up questions is they become much more complex - and they become complex easily. Because asking questions is easy to do.

So we show "okay, here is the core conversion funnel" and then someone says "hmm, that's interesting. Can we maybe break down this conversion funnel by x, y, or z?" And x, y, and z will be super plausible.

It could be simply "hey maybe new and returning users" or "users that have been in touch with this kind of campaign" or "can we see this for users that have been in touch with our customer service?"

For every business person, this sounds like the next logical step. And it is! I would ask the same kind of question if I were in this position.

When Things Get Complicated

The problem is, from a data perspective, this can cause extreme headaches.

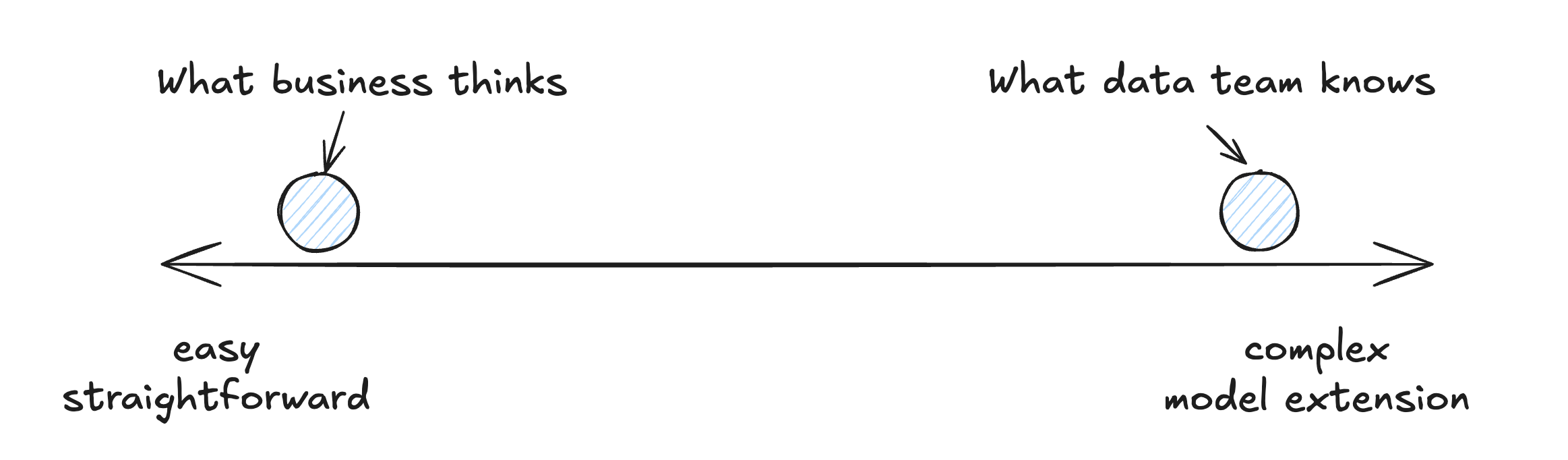

Some breakdowns might be straightforward. As in the example I used before, breaking down by new versus returning customers might be quite close to your current implementation. But everything else is not.
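As a rough illustration of why the first kind of breakdown is cheap, here's a sketch of a funnel split by a segment that already sits on the event data. The step names, the `segment` field, and the sample rows are all assumptions, and the funnel simply counts distinct users per step without enforcing the order of steps.

```python
from collections import defaultdict

# Hypothetical funnel steps and sample events; the segment is already
# available on each event, so no extra data source is needed.
FUNNEL_STEPS = ["view_product", "add_to_cart", "purchase"]

events = [
    {"user_id": "u1", "step": "view_product", "segment": "new"},
    {"user_id": "u1", "step": "add_to_cart", "segment": "new"},
    {"user_id": "u1", "step": "purchase", "segment": "new"},
    {"user_id": "u2", "step": "view_product", "segment": "new"},
    {"user_id": "u3", "step": "view_product", "segment": "returning"},
    {"user_id": "u3", "step": "add_to_cart", "segment": "returning"},
    {"user_id": "u4", "step": "view_product", "segment": "returning"},
    {"user_id": "u4", "step": "add_to_cart", "segment": "returning"},
    {"user_id": "u4", "step": "purchase", "segment": "returning"},
]


def funnel_by_segment(events, steps):
    """Count distinct users per funnel step, separately for each segment."""
    reached = defaultdict(lambda: defaultdict(set))
    for event in events:
        reached[event["segment"]][event["step"]].add(event["user_id"])
    return {
        segment: [len(by_step[step]) for step in steps]
        for segment, by_step in reached.items()
    }


funnel = funnel_by_segment(events, FUNNEL_STEPS)
```

The breakdown is one extra grouping key. That only holds as long as the segment is already part of the data you modeled.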

When they ask for the conversion funnel broken down by customer service interaction, we're leaving the realm of just e-commerce data. Now we have to combine it with our behavioral data from the website. And then they'll ask: "Why doesn't this data match up with what we see on the website?" And you'll have to explain the tracking differences.
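A hedged sketch of what that combination can involve: before any funnel can be split by customer service contact, each web session has to be tied to a customer and then to the support data. All names here (`support_tickets`, `identity_map`, `web_sessions`, the segment labels) are hypothetical and stand in for whatever your systems actually provide.

```python
# Hypothetical inputs from three different systems.
support_tickets = [{"customer_id": "c1"}, {"customer_id": "c3"}]
identity_map = {"anon-a": "c1", "anon-b": "c2"}  # web anonymous id -> customer id
web_sessions = [
    {"anonymous_id": "anon-a"},
    {"anonymous_id": "anon-b"},
    {"anonymous_id": "anon-c"},  # never identified, so no customer id exists
]


def label_support_contact(sessions, identity_map, tickets):
    """Attach a support-contact segment to each web session."""
    contacted = {ticket["customer_id"] for ticket in tickets}
    labeled = []
    for session in sessions:
        customer_id = identity_map.get(session["anonymous_id"])
        if customer_id is None:
            segment = "unknown"
        elif customer_id in contacted:
            segment = "contacted_support"
        else:
            segment = "no_support_contact"
        labeled.append({**session, "customer_id": customer_id, "segment": segment})
    return labeled


labeled_sessions = label_support_contact(web_sessions, identity_map, support_tickets)
segments = [row["segment"] for row in labeled_sessions]
```

Even in this toy version, one session lands in "unknown" and one support customer never shows up on the website at all. Gaps like these are part of what you end up explaining when the numbers don't line up.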

Every follow-up question opens up new complexity. And I think this is where data projects start to get derailed.

It's scary because you're seeing first adoption. The reason people are asking these follow-up questions is that they get the value of the whole setup. You have first product-market fit with your data stack! The problem is the next version of your product becomes much more complex.

How Do You Handle These Situations?

I don't really have an answer that works 100% because I haven't spent enough time in this area in recent years. But I see two main approaches:

Turn Questions into Business Initiatives

Let's assume implementing a request would take four to six weeks. That's a significant investment. If we treat it like an investment decision, then when the question is raised, it needs at least a 30-minute or one-hour session with the stakeholder to investigate purpose and feasibility.

First, let's look at purpose. We just achieved data product-market fit - people are asking questions. We don't want to shut them down immediately by saying "great question, we can't answer, next please." But saying yes and taking six weeks is basically doing the same thing in a passive-aggressive way. We need to be proactive.

When discussing purpose, we need to understand what these people are planning to do with the data. It sounds complicated, but it's not. We say, "We need to understand more about what you're planning to do with this dataset. Does this question come from an observation that makes you believe, for example, that customers keep buying because our customer service is great?"

Maybe their business case is understanding the return on investment for customer service because "our customer service is great, we train people right, we invest time and money, and we want to see indicators that it's paying off."

If you get this context, the whole situation changes. It becomes an important business case - investigating if a significant investment makes sense for the company. In that case, a four-week extension of the model is totally reasonable.

If their answer is "no, it was just an idea, would be interesting to see the impact of customer service" - that's valid but doesn't motivate going deeper. But I wouldn't end there.

I'd ask: "Let's say we figure out that people who've been in touch with customer service have a lower customer lifetime value (CLV): they come back less frequently and buy less. What would you do with that?" The important thing is to see if there's a clear path leading from potential insights to actionable items that could impact the business.

If the business team says, "First, we want to understand if this is the case. Second, we want to make a business case. We want to see how many people come to customer service and don't come back - that's lost revenue. And maybe there's an even larger group who don't even reach out to customer service and just don't come back. We have a problem somewhere, and we want to use this data as a first step to investigate" - then it becomes a business initiative.

This approach prevents us from just producing a dataset that takes time, makes the data model more complex, but then nothing is done with it. When you turn research into a business initiative, you work hand-in-hand to solve a potential business problem.

It's also a great filter mechanism. Many complicated data setups happen because ideas weren't well thought out or rested on flawed reasoning. When two or three people think it through together, you might realize that answering the question wouldn't change much. Having this open dialogue can channel complex questions in a different way.

Broaden Your Data Modeling Approach

The second option is broadening your data modeling approach. Different applications require different approaches, and you can mix them. There's no one truth that rules them all.

Let me share a specific example. I worked with a company that went through the stage I described. They had covered all the core metrics and insights, and then people started asking different questions - a lot about sequences. "What happened before revenue happened?"

They reached out because these questions were increasing, and they understood their current data model couldn't easily answer them. They saw my work on activity schema and thought it might be an option.

We extended their existing data model - we didn't invent something new, just added a new layer where we created an event stream. With this, they unlocked a new class of questions they could answer about what happened before certain events. They served a new version of their data product to customers who could now get new insights.

Event data modeling is great for answering questions about sequences. You make revenue somewhere and want to know what happened before or after. The "what happened before" can be extremely different - marketing touchpoints (classic attribution question), specific discovery behavior - the sky's the limit.
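To show what a "what happened before" question looks like on an event stream, here is a minimal sketch. It is not the model from the project described above. The column names (`customer`, `ts`, `activity`) and the sample activities are assumptions, chosen to resemble a simple one-row-per-event layout.

```python
from datetime import datetime

# Hypothetical event stream: one row per customer activity.
event_stream = [
    {"customer": "c1", "ts": datetime(2024, 1, 1, 10), "activity": "ad_click"},
    {"customer": "c1", "ts": datetime(2024, 1, 2, 9), "activity": "support_ticket"},
    {"customer": "c1", "ts": datetime(2024, 1, 3, 12), "activity": "purchase"},
    {"customer": "c2", "ts": datetime(2024, 1, 1, 8), "activity": "newsletter_open"},
    {"customer": "c2", "ts": datetime(2024, 1, 1, 9), "activity": "purchase"},
    {"customer": "c2", "ts": datetime(2024, 1, 5, 9), "activity": "ad_click"},
]


def activities_before(stream, target):
    """For each target event, list the same customer's earlier activities in time order."""
    ordered = sorted(stream, key=lambda e: (e["customer"], e["ts"]))
    history = {}  # customer -> activities seen so far
    results = []
    for event in ordered:
        seen = history.setdefault(event["customer"], [])
        if event["activity"] == target:
            results.append({
                "customer": event["customer"],
                "ts": event["ts"],
                "before": list(seen),
            })
        seen.append(event["activity"])
    return results


before_purchase = activities_before(event_stream, "purchase")
paths = [row["before"] for row in before_purchase]
```

Swapping `"purchase"` for another activity answers a different question with the same function, which is the point of modeling events as a stream: one layer covers a whole class of sequence questions.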

In my current project, I'm combining both approaches. When I saw questions becoming more complex, I got involved again to understand the motivation. We had built the data model in different ways with an event data model in place, but now I'm creating a new layer on top because the questions call for it. I'm investing about two weeks to extend the model so we can answer this class of questions going forward - not just one question but different similar ones.

Two Key Takeaways

1. Turn data questions into business initiatives when possible. Even when it sounds bold, I've seen great things start this way.

2. Think about your data model like a product. A product doesn't stay the same all the time - you extend features and possibilities. Apply this thinking to your data model as well.
