The End of Digital Analytics

as we know it.

When Amplitude announced their new chief evangelist a few days ago, most people saw a standard hire, with congratulatory comments galore. I saw something different: a clear signal that digital analytics as we know it is fundamentally over.

This wasn't just any hire. They brought in someone who embodied everything that Google Analytics 4 represented—the old marketing analytics world that digital analytics had been built around for two decades. It's like watching a species evolve in real time, except the original habitat is disappearing. Amplitude is essentially saying "we're the new Google Analytics," but for a world where Google Analytics no longer makes sense.

The choice feels deliberate. Amplitude is absolutely competing with Google Analytics, but they're going after the pro marketers—the ones with serious budgets who need more than GA4's confusing interface and limited capabilities can deliver.

But here's what makes this moment significant: it's not an isolated move. It's the clearest signal yet that the era of digital analytics (the one defined by marketing attribution, "shedding light into dark spaces," and that persistent feeling that maybe we're all participating in an elaborate scam) is over. Sorry, that might sound a bit harsher than intended.

I should know. I've spent the last two years writing about how product analytics was changing, how attribution was crumbling, and watching my own client base shift from product teams to revenue people asking completely different questions. The Amplitude announcement just confirms what I've been seeing in my projects.

The foundations that held up digital analytics for 20 years are cracking. What comes next is still taking shape.

In this post, I'll break down why digital analytics was always built on shaky ground—promising data-driven decision making but mostly delivering the feeling of being scientific without the actual business impact. I'll show you how the collapse of marketing attribution (the one thing that actually worked) combined with GA4's disaster created the perfect storm that's ending this era.

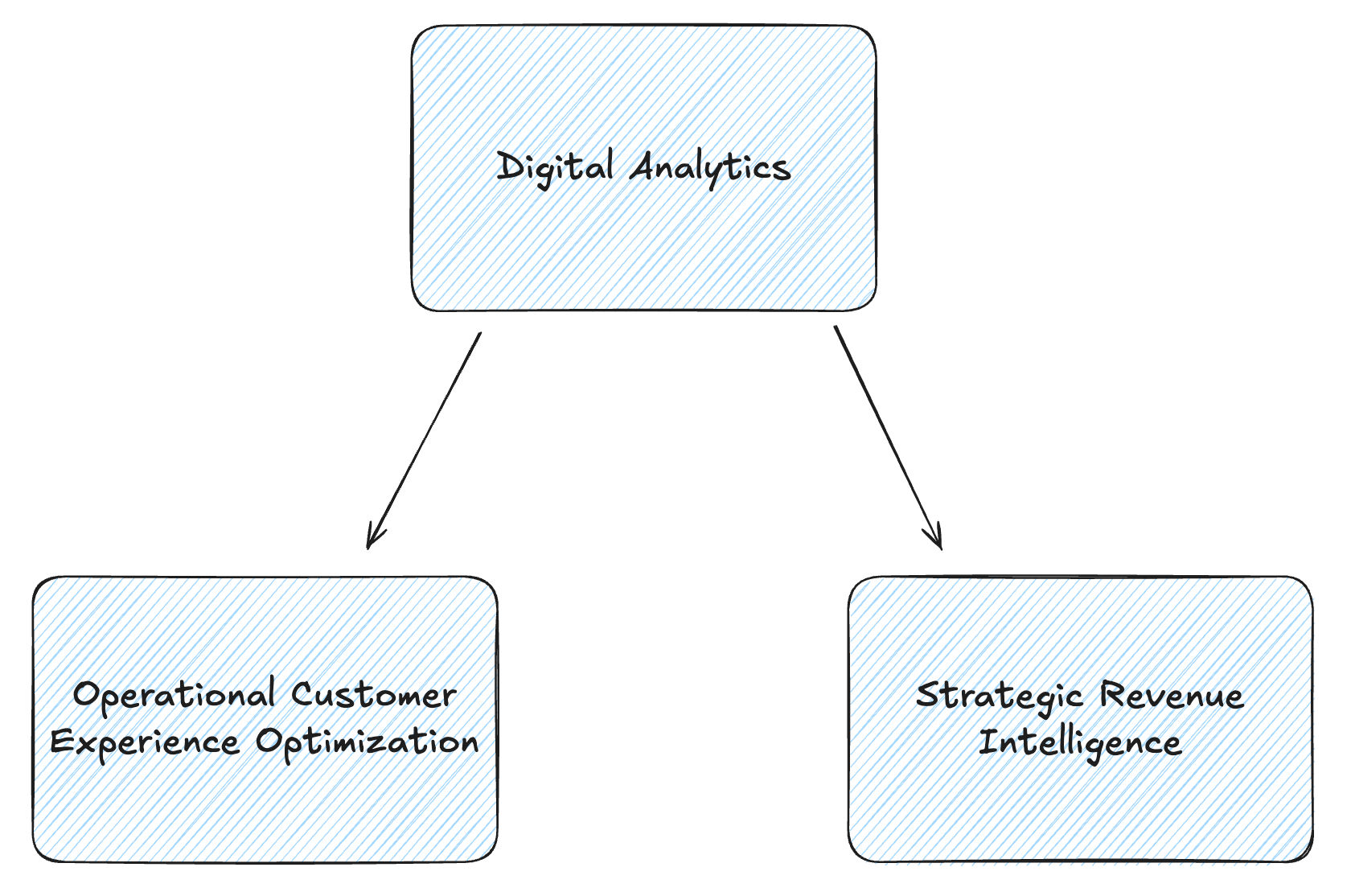

More importantly, I'll walk you through the two distinct paths emerging from this collapse: operational customer experience optimization for teams that need speed over sophistication, and strategic revenue intelligence that finally connects user behavior to business outcomes. Both represent fundamental shifts away from traditional analytics, and understanding them will help you navigate what's coming next.

A quick break before we dive in:

If you like, join Juliana's and my new sanctuary for all minds in analytics and growth who love to call out BS and really want to do stuff that works and makes an impact: ALT+. We have thoughtful discussions like this one, and we run monthly deep-dive cohorts to learn together about fundamental and new concepts in growth, strategy and operations.

Head over to https://goalt.plus and join the waitlist. We are opening up by the end of September 2025, and we are limiting the initial membership to 50 in the first month.

Part I: What Digital Analytics Actually Was

Before I call out the end of something, I need to define what that something actually looked like. Because if we're honest about it, digital analytics was always built on a contradiction that most of us just learned to live with.

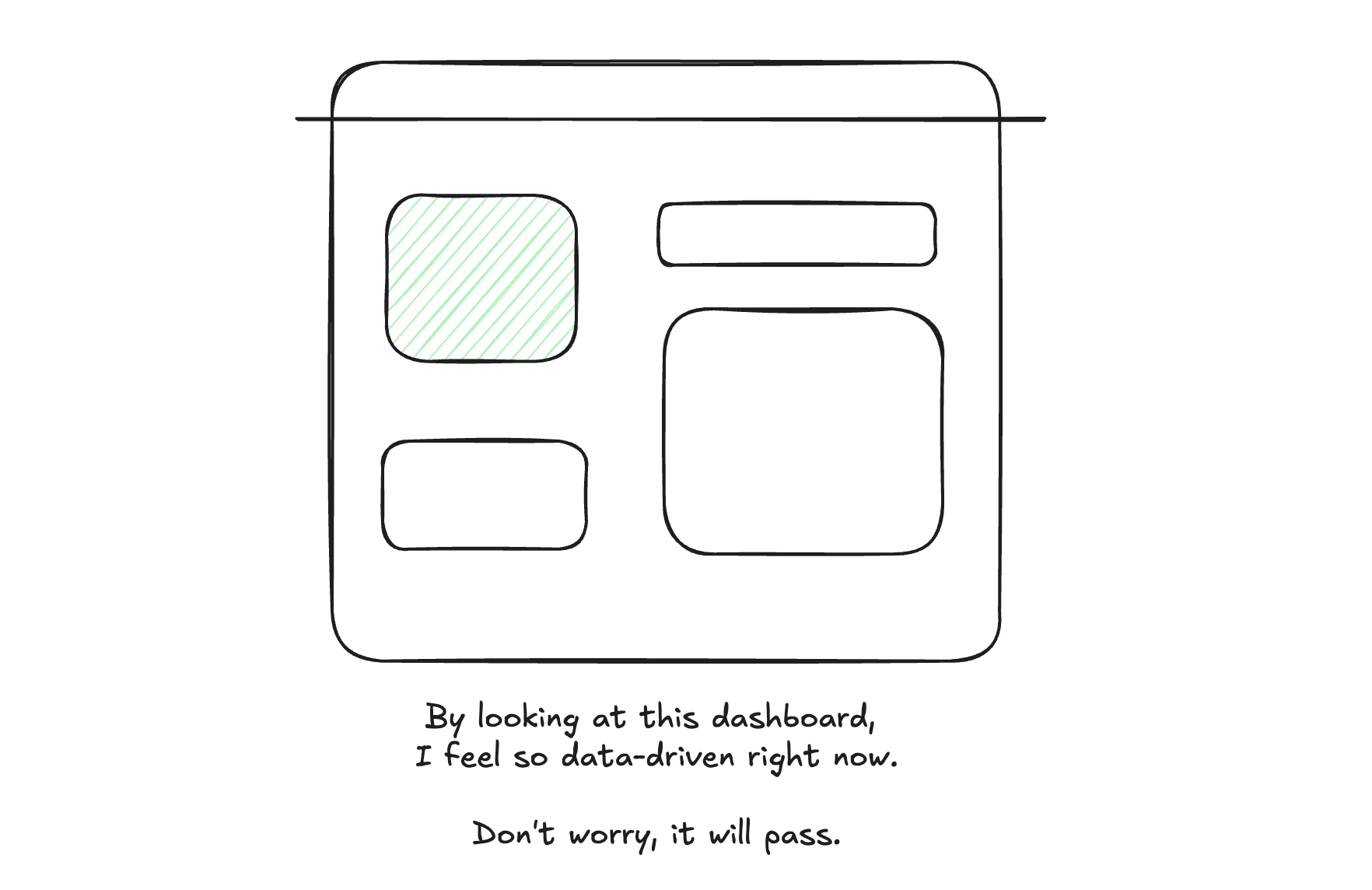

We told ourselves we were doing "data-driven decision making." We built dashboards that showed visits, users, conversion rates. We created elaborate tracking setups, instrumented tag managers (and even created a sub-genre for this: the tracking engineer), and celebrated when we could tell you exactly how many people clicked a specific button. But there was always this nagging question underneath it all: what does any of this actually mean for the business?

I felt this contradiction more acutely than most. In every project, I'd set up these sophisticated analytics implementations, deliver insights about user behavior, and watch clients get excited about finally "understanding their users." But I always had this uncomfortable feeling that I should probably mention that most of this data wouldn't actually change much for them. Because knowing that 200 people clicked a button doesn't tell you what to do about it.

That contradiction defined the entire era. And to understand why digital analytics is ending, we need to understand what it really was—not what we pretended it was.

The Promise That Was Great at the Time but Never Delivered

Let me take you back to when digital analytics felt revolutionary. At least to me.

Google Analytics launched in 2005, and suddenly you could see exactly what was happening on your website. People were visiting your pages! They were clicking your buttons! You could track their entire journey from the first page view to checkout. For the first time, the black box of user behavior was cracked open.

The promise was intoxicating: build-measure-learn. Eric Ries was preaching the lean startup gospel, and analytics was supposed to be the "measure" part that made everything scientific. You'd launch a feature, measure how people used it, learn from the data, and iterate. No more guessing. No more building things users didn't want. Pure, data-driven decision making. Trust me, I truly believed in that.

Product teams were told that if you weren't measuring, you weren't serious about building great products. Marketing teams were promised they could finally prove ROI and optimize every dollar spent. The analytics industry convinced everyone that the difference between successful companies and failures was whether they had proper tracking in place.

And the tools got more sophisticated. Amplitude and Mixpanel emerged with event tracking that made Google Analytics look like the entry-level kid. Now you could track custom events, build complex funnels, analyze cohort retention. You could segment users by any behavior imaginable. The data got richer, the dashboards more beautiful, the possibilities seemingly endless.

But here's what actually happened in practice:

You'd implement comprehensive tracking. You'd build dashboards showing user flows, conversion rates, feature adoption. You'd present insights to stakeholders who would nod appreciatively at the colorful charts. And then... not much would change.

Sure, you'd learn that 15% of users clicked the blue button versus 12% who clicked the red one. But what were you supposed to do with that information? The difference could be noise. It could be meaningful. Most of the time, you couldn't tell.
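
To make the noise problem concrete, here is a minimal sketch of a two-proportion z-test on those click rates. The sample sizes are my assumption for illustration; only the 15% and 12% rates come from the example above.

```python
import math

def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Return (z, two-sided p-value) for the difference between two click rates."""
    rate_a = clicks_a / n_a
    rate_b = clicks_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical traffic: the same 15% vs 12% split at two different volumes.
small = two_proportion_z_test(30, 200, 24, 200)            # 200 users per variant
large = two_proportion_z_test(1500, 10_000, 1200, 10_000)  # 10,000 users per variant

print(f"small sample: z={small[0]:.2f}, p={small[1]:.3f}")
print(f"large sample: z={large[0]:.2f}, p={large[1]:.2g}")
```

With a couple of hundred users per variant, the p-value lands well above the usual 0.05 threshold, so the gap could easily be noise. With ten thousand per variant, the same gap is very unlikely to be chance. The dashboard shows "15% vs 12%" in both cases.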

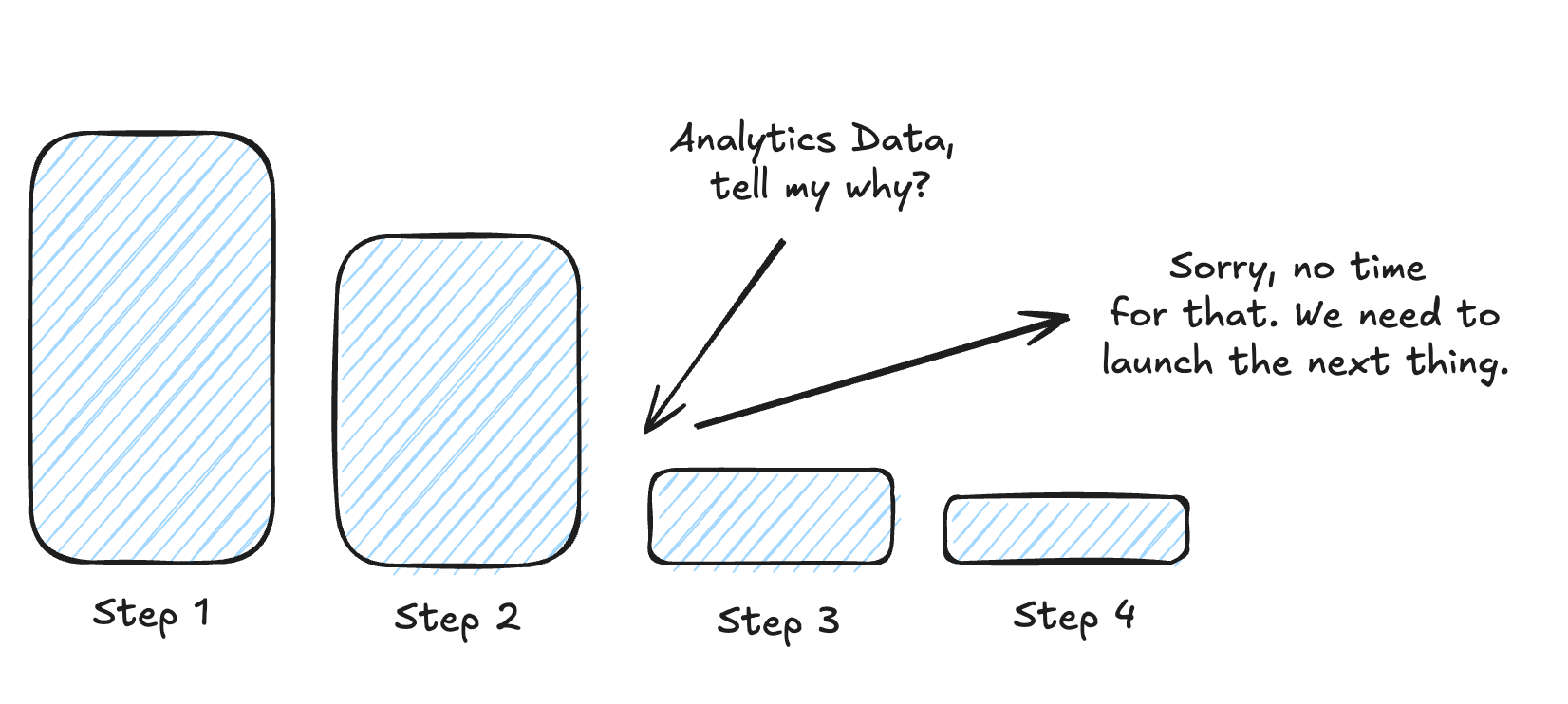

You'd discover that users dropped off at step 3 of your onboarding flow. But was it because step 3 was confusing? Because users weren't motivated enough to continue? Because they'd already gotten what they needed? Because you were targeting the wrong audience? The data showed you what was happening, but rarely why.

The more sophisticated the tracking became, the more this gap became apparent. You could slice and dice user behavior in infinite ways, but the path from insight to action remained frustratingly unclear. Companies would spend months implementing detailed analytics setups only to find themselves asking the same question: "This is all very interesting, but what should we actually do about it?"

The lean startup promise of rapid iteration based on data feedback loops worked for simple cases—A/B testing button colors or headlines. But for the complex questions that actually mattered to businesses—why users weren't converting, what features to build next, how to improve retention—the data rarely provided clear answers.

Instead, what digital analytics delivered was the feeling of being data-driven without the actual business impact. It gave teams something concrete to discuss in meetings, numbers to put in reports, charts to present to management. It made the messy, uncertain process of building products feel more scientific and controlled.

But underneath it all, most product and business decisions were still being made the same way they always had been: through intuition, customer feedback, market research, and educated guesswork. The analytics just provided a veneer of quantitative support for decisions that were fundamentally qualitative.

This wasn't entirely useless. Having some data was better than having no data. And occasionally, the insights were genuinely actionable. But the gap between what was promised and what was delivered was enormous—and almost everyone in the industry knew it, even if we didn't talk about it openly.

The Two Things That Actually Worked

Despite all the overselling and unfulfilled promises, digital analytics wasn't completely worthless. When I look back honestly at what actually delivered business value, two things stand out.

Marketing Attribution (The Real MVP)

Marketing attribution was the killer app of digital analytics. Full stop.

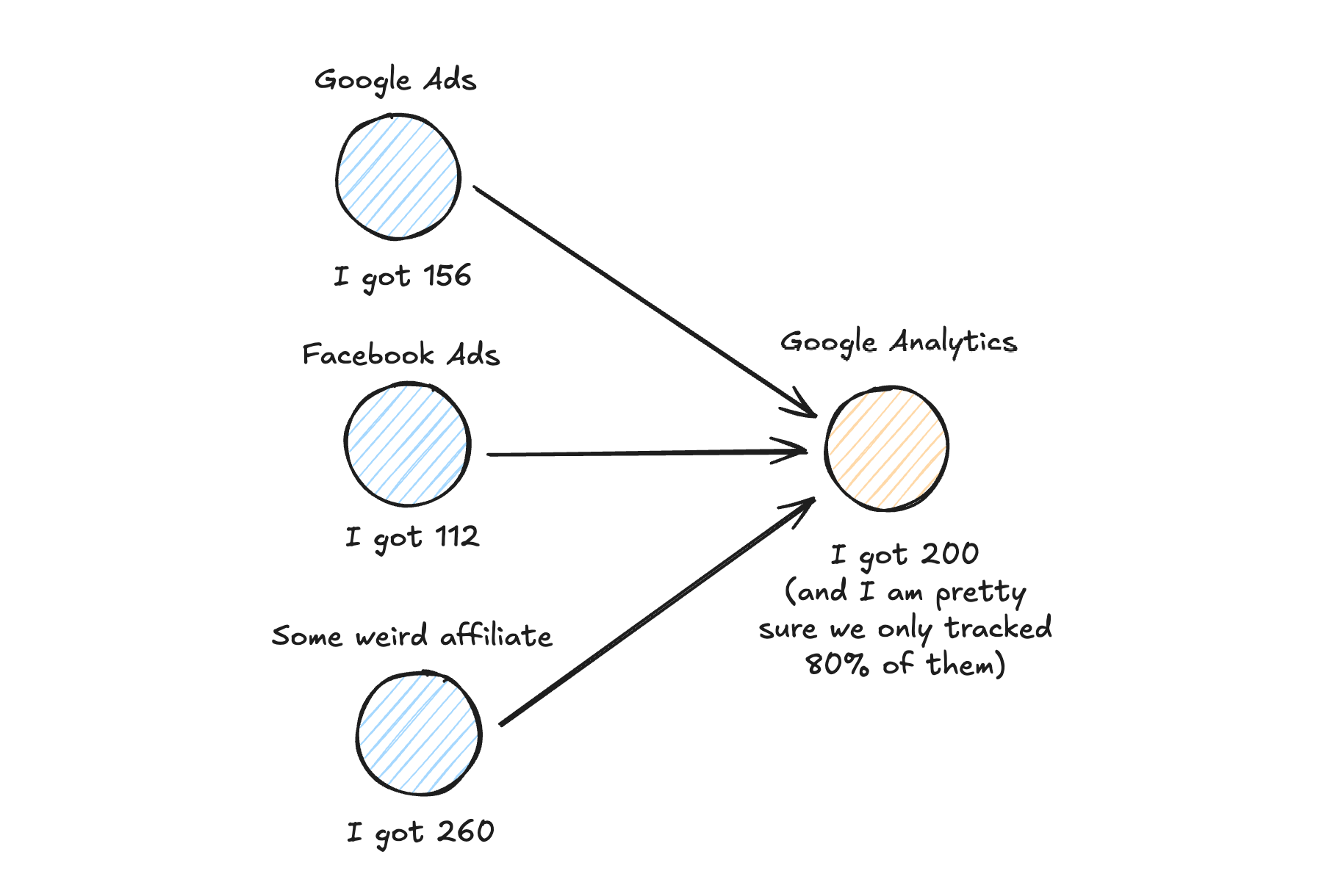

Before Google Analytics, if you ran digital ads on multiple campaigns, you were basically flying blind. You'd spend money on Google AdWords, some banner ads, maybe early Facebook campaigns. Each platform would claim credit for conversions, and you'd end up with 150% attribution because everyone was taking credit for the same sale.

Google Analytics solved this by being the neutral referee. It sat on your website, tracked users from all sources, and told you which marketing touchpoints actually led to conversions. For the first time, marketers could confidently say "this campaign drove 47 conversions, that one drove 12" without having to trust the advertising platforms' self-reported numbers.
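
Here is a rough sketch of how that over-counting happens and what a single referee changes. The orders and platform names are made up for illustration, and last-touch assignment is just one simple way a referee could decide.

```python
# Hypothetical orders, each listing the ad platforms that touched the buyer, in order.
orders = {
    "order-1": ["adwords", "facebook"],
    "order-2": ["adwords"],
    "order-3": ["banner", "adwords"],
    "order-4": ["facebook"],
}

def platform_claims(orders):
    """Each platform counts every order it touched, so shared orders are double-counted."""
    claims = {}
    for touches in orders.values():
        for platform in set(touches):
            claims[platform] = claims.get(platform, 0) + 1
    return claims

def last_click(orders):
    """A single referee assigns each order to exactly one platform (the last touch)."""
    credit = {}
    for touches in orders.values():
        credit[touches[-1]] = credit.get(touches[-1], 0) + 1
    return credit

claimed = platform_claims(orders)
refereed = last_click(orders)

# Share of real orders that ends up "attributed" under each approach.
claimed_share = sum(claimed.values()) / len(orders)
refereed_share = sum(refereed.values()) / len(orders)
print(f"platform self-reporting: {claimed_share:.0%}, neutral referee: {refereed_share:.0%}")
```

In this toy data the platforms together claim six conversions for four real orders, which is the 150% situation, while the referee's numbers always add up to the orders that actually happened.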

This was genuinely revolutionary for marketing teams. It enabled proper budget allocation, campaign optimization, and ROI calculations. When your CFO asked why you needed that $50k marketing budget, you could show them exactly which channels were driving revenue. And the feedback was fast: it took just a few days of data collection.

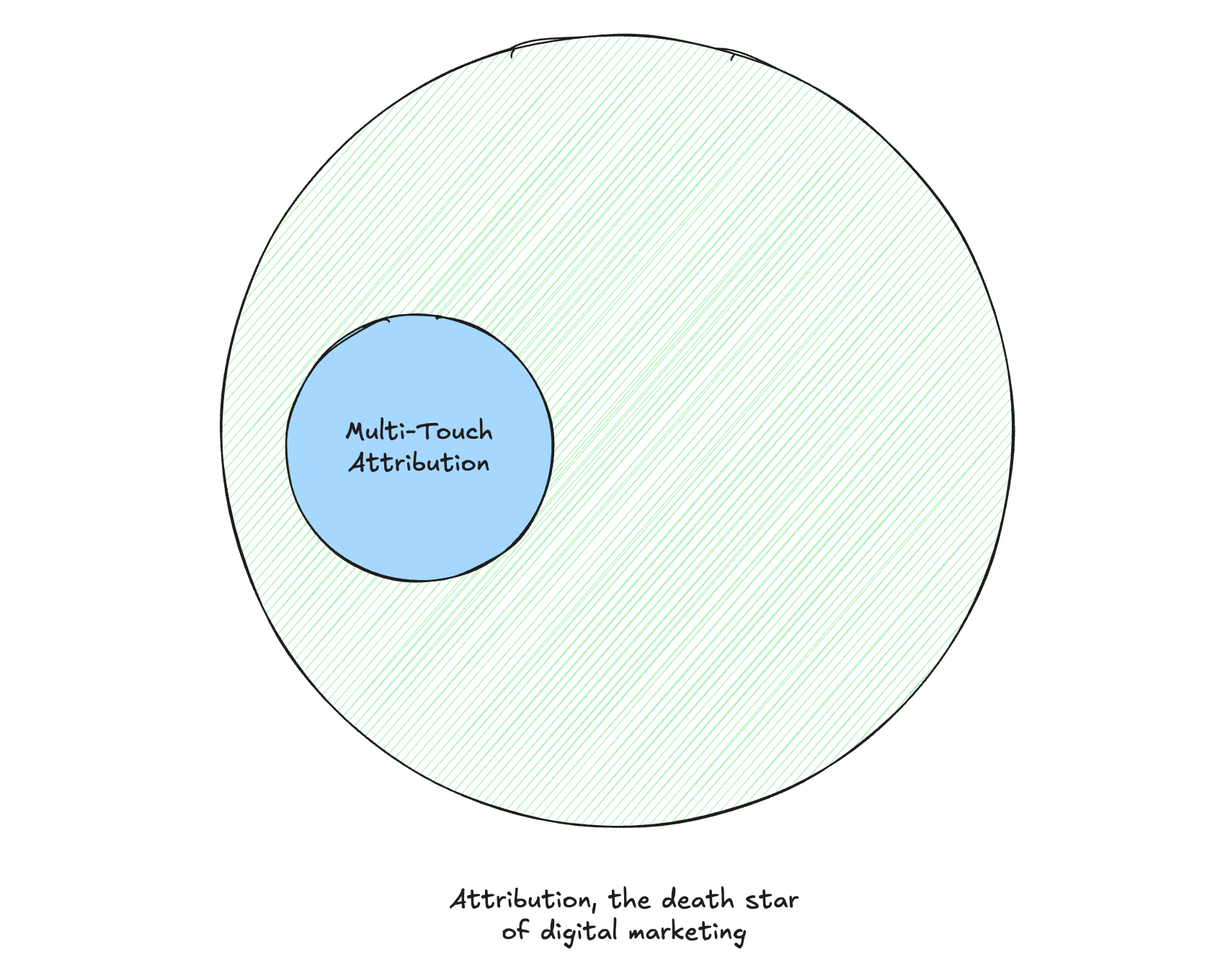

The attribution models got more sophisticated over time—first click, last click, time decay, position-based. Tools like Amplitude and Mixpanel eventually added multi-touch attribution that could track the entire customer journey across months of touchpoints (well, in theory at least). This was real, actionable data that directly impacted how businesses spent money.
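
For readers who have never looked under the hood, the four models named above can be sketched in a few lines. This is an illustration under my own assumptions, not how any specific tool implements them: the journey data is invented, and the 7-day half-life and the 40/20/40 split are just commonly used defaults.

```python
from collections import defaultdict

def _credit(channels, weights):
    """Normalize weights to sum to 1 and add them up per channel."""
    total = sum(weights)
    credit = defaultdict(float)
    for channel, weight in zip(channels, weights):
        credit[channel] += weight / total
    return dict(credit)

def first_click(journey):
    channels = [channel for channel, _ in journey]
    return _credit(channels, [1.0] + [0.0] * (len(channels) - 1))

def last_click(journey):
    channels = [channel for channel, _ in journey]
    return _credit(channels, [0.0] * (len(channels) - 1) + [1.0])

def time_decay(journey, half_life_days=7.0):
    """Touches closer to the conversion get more credit (assumed 7-day half-life)."""
    channels = [channel for channel, _ in journey]
    weights = [0.5 ** (days_before / half_life_days) for _, days_before in journey]
    return _credit(channels, weights)

def position_based(journey, edge_share=0.4):
    """First and last touch get edge_share each; the rest is split across the middle."""
    channels = [channel for channel, _ in journey]
    n = len(channels)
    if n <= 2:
        weights = [1.0] * n
    else:
        middle = (1 - 2 * edge_share) / (n - 2)
        weights = [edge_share] + [middle] * (n - 2) + [edge_share]
    return _credit(channels, weights)

# Hypothetical journey: (channel, days before the conversion), oldest touch first.
journey = [("paid_search", 14), ("newsletter", 7), ("facebook", 0)]

for model in (first_click, last_click, time_decay, position_based):
    shares = {channel: round(share, 2) for channel, share in model(journey).items()}
    print(f"{model.__name__:>15}: {shares}")
```

The same three touchpoints get very different credit depending on the model you pick, which is worth remembering whenever someone presents attribution numbers as facts.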

Marketing attribution worked because it solved a concrete business problem: "Where should I spend my marketing budget?" The data quality might not have been perfect, but it was infinitely better than the alternative of blindly trusting advertising platforms or making gut decisions.

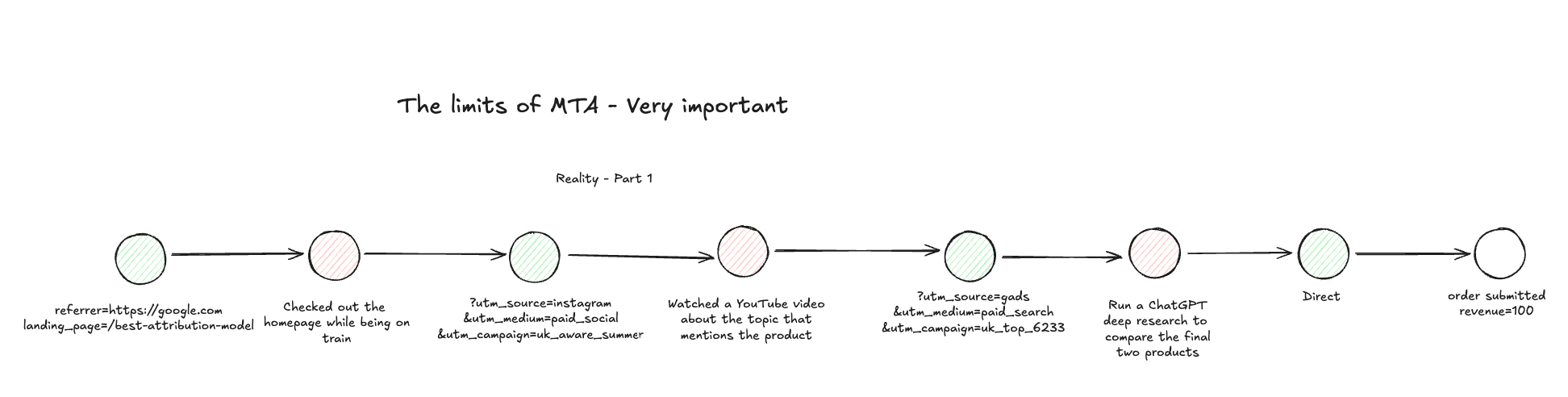

But over time, several things happened. Browsers blocked specific tracking, users did the same, and then cookie consent banners did too. But what "destroyed" marketing attribution with Google Analytics was the way marketing works today: across multiple channels, including plenty that never create any immediate touchpoint on your website. You see a lot of direct and brand traffic, along with the uneasy feeling that it is all triggered by other things that were never tracked (LinkedIn posts, YouTube videos, ...).

Shedding Light Into Dark Spaces (The Softer Win)

The second thing that worked was much less dramatic but still valuable: making the invisible visible.

Before analytics, you literally had no idea what was happening on your website. How many people visited? Which pages were popular? Where did people get stuck? It was all a black box. Analytics opened that box and showed you patterns you never could have guessed.

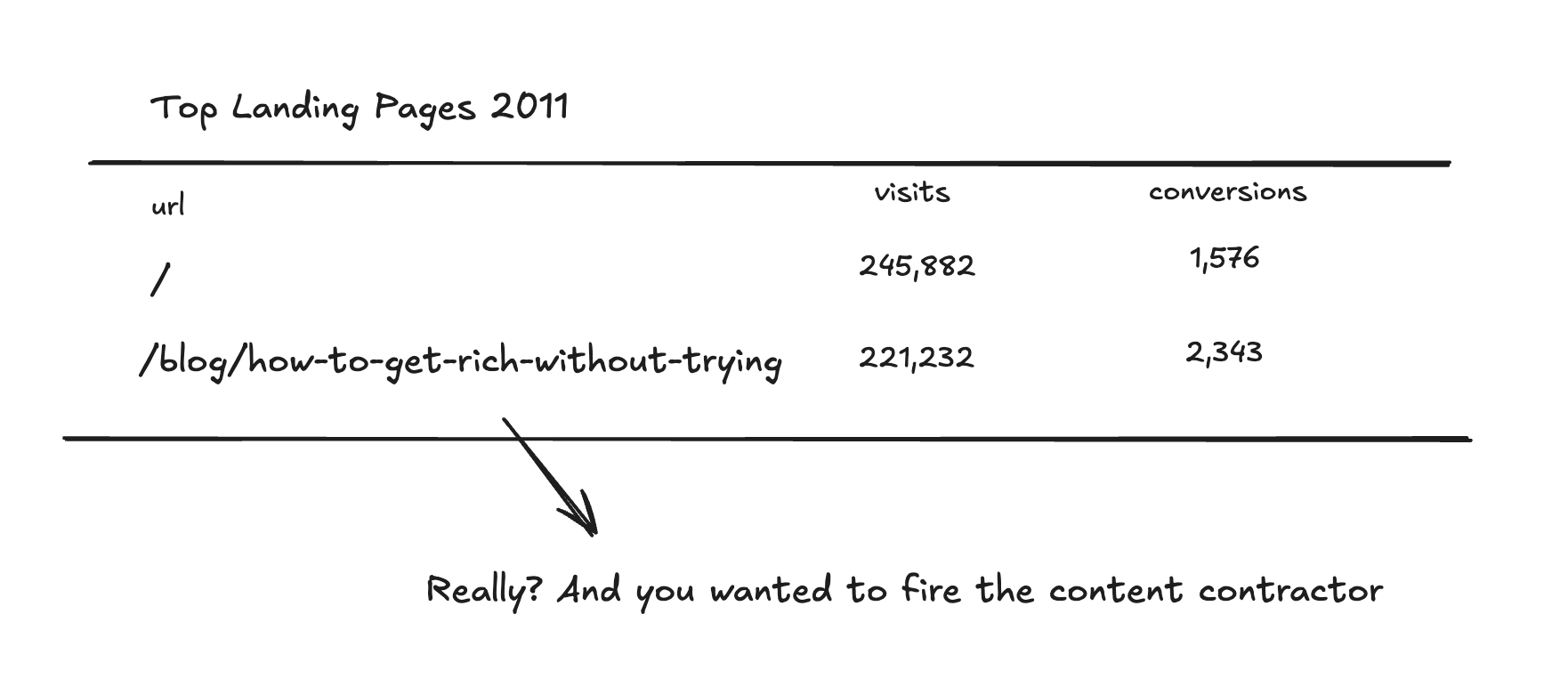

You'd discover that 40% of your traffic was going to a blog post you'd forgotten about. Or that users from mobile were behaving completely differently than desktop users. Or that your carefully designed homepage flow was being bypassed by 70% of users who came in through search and landed on product pages.

This "shedding light" value was real, even if it rarely led to immediate action. It changed how teams thought about their products and websites. It made abstract concepts like "user behavior" concrete and discussable.

UX designers could see which parts of interfaces were being ignored. Product managers could identify features that nobody was using. Marketing teams could spot traffic patterns they'd never noticed. Content creators could see which pieces resonated and which flopped.

The problem with this second category was that the insight-to-action gap was enormous. Learning that users spent an average of 2.3 minutes on your pricing page was interesting, but what were you supposed to do with that information? Was 2.3 minutes good or bad? Should you make the page shorter or longer? The data raised more questions than it answered.

But it still had value. It gave teams a shared vocabulary for discussing user behavior. It made conversations more specific and less speculative. Instead of arguing about whether users "probably" did something, you could look at the data and see what they actually did.

The Harsh Reality

Here's the uncomfortable truth: marketing attribution probably accounted for 80% of the actual business value that digital analytics delivered over the past 20 years. Everything else—all the sophisticated funnels, cohort analyses, user journey mapping, behavioral segmentation—was mostly the "shedding light" category. Interesting, sometimes useful, but rarely driving major business decisions.

Even in my own work, when clients pushed me to identify the specific business impact of our analytics implementations, it almost always came back to marketing attribution. That was where the rubber met the road, where data actually changed how money got spent.

This imbalance was the industry's dirty secret. We sold comprehensive analytics packages that promised to transform how companies understood their customers. But in practice, most of the value came from solving the much narrower problem of marketing measurement. The rest was elaborate window dressing that made the whole thing feel more important and scientific than it actually was.

Part II: The Foundation Is Cracking

The Collapse of Marketing Attribution

Barbara and I have been running marketing attribution workshops for over a year and a half now. We decided to do this because we both had the feeling that something was shifting significantly with attribution, and there was a lot of confusion about it.

What we discovered in our research was sobering: the role and possibilities of click-based attribution, the foundation of marketing analytics for two decades, are shrinking every year. And they're shrinking for reasons that go far deeper than the obvious culprits everyone talks about.

The Obvious Factors (That Everyone Mentions)

Yes, consent requirements in Europe matter. When you have to ask users for permission to track them, you immediately limit your ability to connect marketing touchpoints to conversions. Apple's anti-tracking initiatives have been devastating for attribution, especially on mobile where they have dominant market share. And there's now a whole cottage industry of consultants promising to get you "a little bit more data back" through server-side tagging and other technical workarounds.

But these technical and regulatory changes are just symptoms of a much bigger shift.

The Real Problem: Marketing Has Evolved Beyond What Attribution Can Handle

The fundamental issue is that marketing itself has changed dramatically, while attribution tools are still built for a world that no longer exists.

In the early days of ecommerce, digital marketing was relatively simple. You ran most of your campaigns on Google Ads (AdWords at the time) because search was the dominant way people discovered products. Maybe you added Facebook when social advertising became viable. You had two channels, maybe three, and attribution could realistically capture 70% of the customer journey because there weren't that many touchpoints to track.

Today's marketing is exponentially more complex. You can't design a successful marketing strategy around just paid search and paid social anymore. Companies run campaigns across dozens of channels: influencer partnerships, podcast sponsorships, YouTube content, LinkedIn thought leadership, community building, email sequences, retargeting campaigns, affiliate programs, PR initiatives, and SEO content strategies.

The more channels you operate across, the harder attribution becomes. When someone watches an impactful YouTube video you created, gets retargeted on Instagram, reads your newsletter, sees your founder speak at a conference, and then finally converts—how do you attribute that sale? Traditional attribution systems can maybe capture two or three of those touchpoints if you're lucky.

The Black Box Takeover

Here's where it gets really problematic: advertising platforms have largely given up on deterministic attribution too. They've moved to probabilistic models—black boxes that use machine learning to estimate conversions and optimize campaigns automatically.

Google Ads and Facebook Ads now essentially say "trust us, our AI knows what's working" rather than providing granular data about which specific touchpoints drove conversions. They've shifted to broad targeting and automated bidding strategies where you hand over control to their algorithms and hope for the best.

This fundamentally changes the relationship between marketers and data. Instead of using attribution data to fine-tune campaigns, marketers are increasingly just setting broad parameters and letting platform AI handle the optimization. The detailed attribution analysis that justified tools like Google Analytics becomes less relevant when you're not making granular targeting decisions anymore.

The Brutal Reality Check

Here's a number (an educated guess) that could terrify anyone in the analytics industry: of all the analytics setups I know, maybe 10% are actually used to make good marketing decisions.

Most companies still have Google Analytics or Amplitude running. They still generate monthly reports showing visits, conversions, and channel performance. But when you dig into how marketing budgets actually get allocated, how campaigns actually get optimized, and how strategic decisions actually get made, the attribution data plays a much smaller role than it used to (or too big a role, given the small sample sizes behind it).

The rest is just data theater—numbers that get presented in meetings to make discussions feel more scientific, but don't fundamentally change what anyone does.

Marketing attribution was the one part of digital analytics that consistently delivered business value. It was the foundation that justified the entire industry. And now it's crumbling, not because the technology failed, but because the marketing landscape evolved beyond what attribution systems can meaningfully measure.

When the main thing that actually worked stops working, everything else becomes much harder to justify.

The Google Analytics 4 Disaster

GA4 remains a mystery to me. I made a video a long time ago trying to explain why GA4 ended up the way it did, but the gist is this: GA4 looks like a product that was trying to serve two or three completely different strategies at once, and that definitely didn't work out well in a single product.

Maybe it's not even that complex. Maybe GA4 just reflects that Google Analytics doesn't play a significant role for Google anymore, and the decision was made that GA could be just a good entry product for getting people onto Google Cloud Platform and BigQuery. This would totally fit Google Analytics' history, since it was always a bit of an entry product for a different offering. Initially, Google Analytics was the sidekick of Google Ads. Now maybe Google Analytics 4 is the sidekick of Google Cloud Platform.

Abandoning the Core Audience

But here's what's fascinating about what happened in the marketer space: Google basically abandoned their default audience.

For everyone who worked in marketing, Google Analytics was the default tool. It was the thing every marketer knew they had to invest time in understanding, at least the basics, to do their job properly. You'd learn how to set up goals, understand acquisition reports, and work with the basic attribution models. It wasn't perfect, but it was predictable and learnable.

One thing you always have to keep in mind, which I think is often forgotten in the analytics space, is that marketers have a lot of things to do. Analytics and analyzing performance is just a small part of their job. They need tools that work out of the box and don't require extensive training to get basic insights.

Universal Analytics was exactly that. It had a very opinionated and strict model for doing things, but that's what made it work so well. The constraints actually helped: marketers knew where to find things, how to interpret the data, and what the numbers meant.

The Migration Nightmare

Then came the GA4 migration, which wasn't really a migration at all. Google forced everyone to implement completely new tracking code, learn an entirely different interface, and adapt to a fundamentally different data model. This was essentially a "rip and replace" project disguised as an upgrade.

For a tool that was supposed to be accessible to everyday marketers, this was devastating. The new interface was confusing. Basic reports that took two clicks in Universal now required navigating complex menus or building custom reports. Simple concepts like "sessions" were replaced with more abstract event-based models that required technical understanding to interpret correctly (tbh, I loved the move to an event-based, user-focused model, but for other reasons).

The timing made it worse. Google announced the Universal sunset with barely 18 months' notice, forcing teams to scramble. Many companies had spent months or years getting their Universal Analytics setup just right, only to be told they had to start over from scratch.

A Product Without a Clear User

The most damaging thing about GA4 is that it's unclear who it's actually built for. The interface is too complex for casual marketers but not powerful enough for serious analysts. The reporting is too limited for agencies but too overwhelming for small business owners. The data model is more flexible than Universal but harder to understand for non-technical users.

It feels like Google tried to build one product that could serve everyone and ended up serving no one particularly well. Universal Analytics succeeded because it had clear constraints and made specific trade-offs. GA4 feels like a compromise that satisfies no one's needs completely.

The Opportunity This Created

Google's misstep created a massive opportunity for tools like Amplitude and Mixpanel. Suddenly, there were millions of frustrated marketers who had to relearn analytics anyway. If you're going to invest months learning a new tool, why not learn one that actually solves your problems instead of creating new ones?

This is exactly the market opportunity that Amplitude is going after with their evangelist hire. They're positioning themselves as "Google Analytics, but actually good"—the tool for marketers who have outgrown GA4's limitations and have budget for something better.

The GA4 disaster proved that the market was ready for professional-grade marketing analytics tools. Google had essentially trained an entire generation of marketers to expect more from their analytics, then delivered a product that provided less. That gap is what Amplitude, Mixpanel, and others are trying to fill.

The Final Crack in the Foundation

The combination of attribution collapse and the GA4 disaster created a perfect storm. Just as the main value proposition of digital analytics (attribution) was becoming less reliable, the dominant platform that millions of teams depended on was imploding.

This left the entire digital analytics ecosystem in chaos. Teams that had been running on autopilot with Universal Analytics suddenly had to make active decisions about their analytics strategy. And when they looked honestly at what they were getting from their current tools, many realized they'd been going through the motions for years without much actual business impact.

GA4 didn't just fail as a product. It forced an entire industry to question whether traditional digital analytics was still worth the effort. (To be honest, I am exaggerating a bit here. Most teams "swallowed the toad", a German saying roughly equivalent to "biting the bullet": they implemented GA4 and then did nothing with it, just like before.)

Part III: Two Paths Forward

Digital analytics will still tag along for a long time. Things rarely disappear quickly—usually they just stick around because people have invested in them. But I'm seeing two distinct directions where the real activity is moving.

Both have their origins in the old marketing and product analytics world, but they represent fundamental shifts in what we're trying to accomplish. One direction is highly operational and immediate. The other is more sophisticated and strategic. And interestingly, they're attracting completely different audiences than traditional analytics ever did.

This is where it gets interesting.

Path 1: Customer Experience Optimization

Marketing Teams Need Speed, Not Deep Analysis

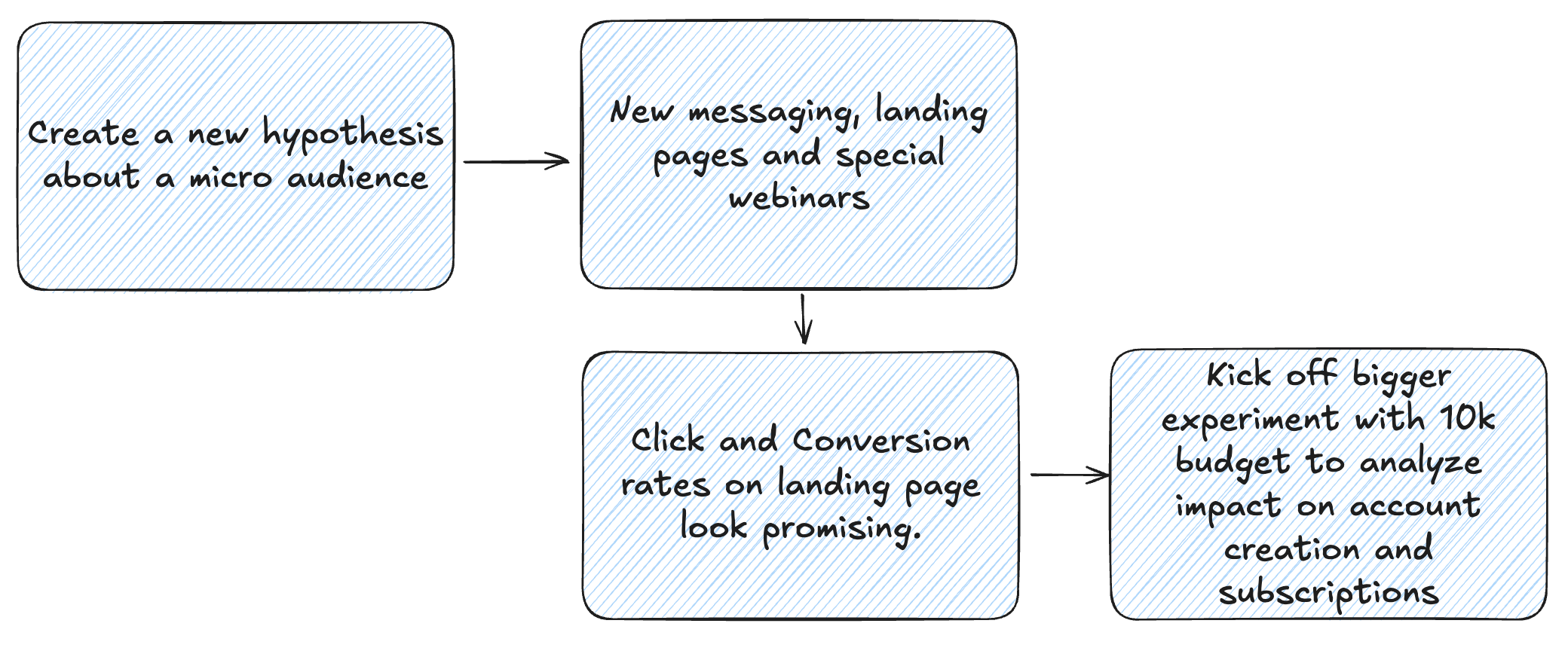

One characteristic of marketing teams that often gets overlooked: marketing is (or can be) highly operational and fast-moving. What I mean is this: marketing teams want to experiment constantly, implement quickly, and see results within days or weeks, not months.

When you look at the experimentation space, it's interesting how differently product teams and marketing teams approach testing. Product teams will run careful A/B tests on new features, analyzing user behavior over weeks or months to understand the impact. But they're often working with relatively low volumes—maybe a few thousand users see a new feature, and you need time to gather meaningful data.

Marketing experimentation is completely different. You can test new ad creative and get results within hours. You can launch a new email campaign to 50,000 subscribers and know by the end of the day whether it worked. You can experiment with different landing pages, messaging approaches, targeting parameters, and budget allocations across multiple channels simultaneously.

The volume and speed of feedback loops in marketing are extraordinary. The best digital marketing teams I've worked with had high rates of experimentation built into their DNA. They were constantly testing new channels, new messaging, new campaign structures. They wanted to know immediately whether something was working so they could either scale it up or kill it and move on to the next test.

This creates a fundamentally different relationship with data than what traditional analytics provided. Marketing teams don't need sophisticated cohort analyses or complex user journey mapping. They need to know: did this campaign drive more conversions than that one? Which landing page performed better? They need local insights more than complex big-picture data.

What marketing teams have always needed is operational analytics—data that directly enables action within their day-to-day workflows. They want to create a segment of users who visited the pricing page but didn't convert, then immediately send those users a targeted email or show them specific ads. They want to identify which blog posts are driving the most qualified traffic and create more content like that. They want to see which campaigns are underperforming and pause them before they waste more budget.
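
The pricing-page segment is a good example of how simple operational analytics can be. Here is a minimal sketch, assuming a flat list of tracked events. The event names, the `/pricing` path, and the `purchase` conversion event are hypothetical, and a real setup would query a warehouse or the analytics tool's own segment builder instead.

```python
# Hypothetical raw events as they might come out of an event-tracking setup.
events = [
    {"user_id": "u1", "event": "page_view", "page": "/pricing"},
    {"user_id": "u1", "event": "purchase"},
    {"user_id": "u2", "event": "page_view", "page": "/pricing"},
    {"user_id": "u3", "event": "page_view", "page": "/blog/attribution"},
    {"user_id": "u4", "event": "page_view", "page": "/pricing"},
    {"user_id": "u4", "event": "page_view", "page": "/features"},
]

def visited_but_not_converted(events, page="/pricing", conversion_event="purchase"):
    """Return the user ids that viewed `page` but never fired `conversion_event`."""
    visited, converted = set(), set()
    for event in events:
        if event["event"] == "page_view" and event.get("page") == page:
            visited.add(event["user_id"])
        elif event["event"] == conversion_event:
            converted.add(event["user_id"])
    return sorted(visited - converted)

segment = visited_but_not_converted(events)
print(segment)  # the audience to hand to the email or ad tool
```

Nothing here is analytically sophisticated. The value comes from how quickly that list of users can be pushed into the tool that sends the email or shows the ad.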

The AI Acceleration Factor

With AI capabilities expanding, this operational speed is going to increase dramatically. Marketing teams will be able to generate multiple versions of ad creative, test different messaging approaches, and optimize campaigns in real-time with minimal human intervention.

This amplifies the need for analytics that can keep up with the pace of iteration. When you're testing dozens of variations simultaneously and making daily optimization decisions, you can't wait for weekly reports or manual analysis. You need systems that automatically surface insights and enable immediate action.

The gap between "here's what the data shows" and "here's what you should do about it" needs to shrink to zero. Marketing teams operating at AI speed won't have time for the traditional analytics workflow of data exploration, insight generation, and then separate implementation phases. They need integrated systems that combine analysis and action in the same interface.

This is why the future of marketing analytics looks nothing like the traditional digital analytics we've known. It's not about better charts or more sophisticated analysis. It's about making data operationally useful within fast-moving workflows that are only getting faster.

From Analytics to Action: The Amplitude Evolution

When Amplitude started moving into the marketing space, they did the obvious first step: feature parity with Google Analytics. They added attribution capabilities, channel grouping, and ecommerce functionalities. With cart analytics introduced about three years ago, they were essentially saying "we can do everything GA does, but better."

This made sense strategically. There were millions of frustrated GA4 users who needed to migrate anyway, so why not offer them a more powerful alternative? But achieving feature parity was just the entry ticket. The real evolution happened when they started thinking about what comes after analytics.

The CDP Experiment

Amplitude experimented with becoming a Customer Data Platform (CDP). The logic was sound: if you can identify interesting user segments in your analytics data, why not enable users to immediately act on those insights by sending targeted messages via SMS, email, or ad platforms?

They quickly learned that building CDPs is complex work. Anyone who's worked with identity matching and identity graphs knows this pain. You can see they've scaled back from the full CDP vision—they still offer basic activation capabilities, but they've strengthened their integrations with dedicated CDP tools like Segment rather than trying to replace them entirely.

But this experiment taught them something important: the gap between insight and action was where the real value lived.

The AI Agent Approach

Amplitude's second AI product launch was much more interesting than their first (which was the obvious "chat with your data" feature that everyone shipped). They introduced an AI agent that continuously analyzes your data, identifies improvement opportunities, and most importantly, connects those opportunities to specific actions you can take.

Instead of just telling you "many users drop off during onboarding step 3," the AI suggests concrete changes you could make to that step. It might recommend specific messaging tweaks, identify friction points that could be streamlined, or suggest A/B tests that could improve conversion rates.

This is a fundamental shift from traditional analytics. You're not getting reports that require interpretation—you're getting actionable recommendations that are ready to implement. The analysis and the suggested action are bundled together.

A Thought Experiment: Analytics + CMS + AI

This approach could go much further than what Amplitude has built so far. Imagine connecting your analytics platform to your content management system with AI-generated messaging capabilities.

You could have a content pool designed for your main personas across different use cases. Based on real-time analytics data, the system identifies that a visitor appears to match persona A and seems interested in use case B. The platform then dynamically generates and serves content optimized for that specific combination.

Instead of static websites with fixed messaging, you'd have adaptive experiences that evolve based on user behavior patterns, continuously optimized by AI, and informed by real-time analytics data. The feedback loop between data collection, analysis, and content optimization would happen automatically within minutes instead of months.

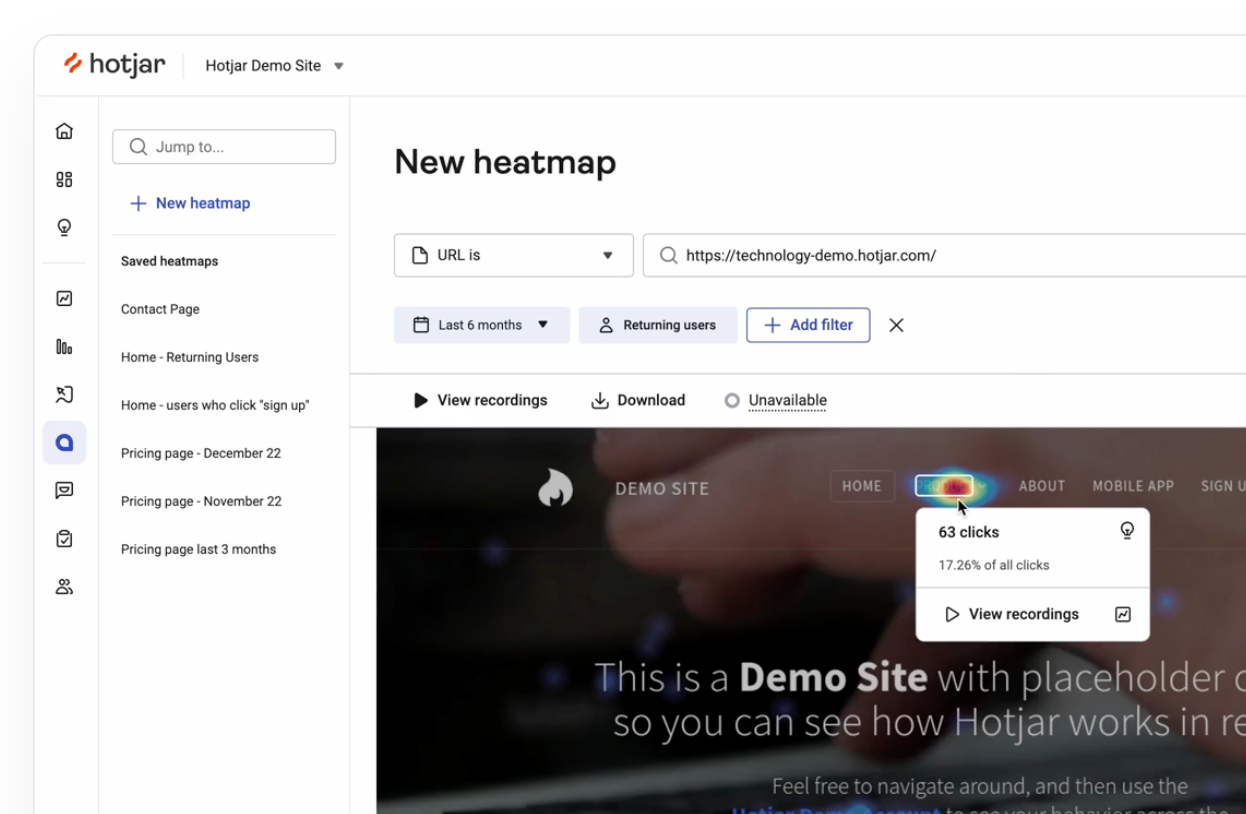

The Hotjar Pattern: Why Simple Beats Sophisticated

Hotjar was always a weird tool for me. I'd encounter it constantly in client setups, running alongside Google Analytics, and I'd think: "Why do teams need this when GA already delivers so little value and Hotjar feels even more limited?"

But I was missing the point entirely.

What Hotjar Got Right

Hotjar solved one specific problem extremely well: it made user behavior immediately visible and actionable. You could see a click map overlaid on your landing page within minutes of implementation. You could watch session replays of actual users interacting with your site. You could set up simple surveys that appeared at key moments in the user journey.

The data wasn't sophisticated. The insights weren't groundbreaking. But the feedback loop from question to answer was incredibly fast. A UX designer could see exactly where users were clicking, identify obvious friction points, and make immediate changes. A marketer could watch recordings of users struggling with the checkout flow and spot conversion killers within hours.

While I was judging Hotjar for lacking analytical depth, UX designers were using it to make their interfaces better every day. They weren't doing complex behavioral analysis—they were seeing patterns that helped them solve specific problems quickly.

The ContentSquare Acquisition

Hotjar's acquisition by ContentSquare validates this approach on an enterprise scale. ContentSquare has been the leader in customer experience optimization for years, but primarily at the enterprise level with enterprise-level complexity and pricing.

The customer experience optimization space was always missing something below the enterprise tier. These enterprise solutions felt like classic sales-driven products—not attractive by default, only valuable after a sales team convinced a CMO they needed the "magic" capabilities.

ContentSquare acquiring Hotjar (and Heap after that) gives them access to the bottom-up market that Hotjar had built. Suddenly, customer experience optimization isn't just for enterprises with six-figure budgets and dedicated teams. It's accessible to any company that wants to understand how users actually interact with their interfaces.

Why This Pattern Matters

The Hotjar pattern reveals something important about the direction analytics is moving. Users increasingly prefer tools that are:

- Immediately actionable rather than analytically sophisticated

- Workflow-integrated rather than requiring separate analysis phases

- Problem-specific rather than trying to serve every possible use case

- Built around fast feedback loops rather than comprehensive data collection

Amplitude has clearly learned from this pattern. They're building features that feel more like Hotjar—click maps, auto-tracking capabilities, and immediate visual feedback—rather than just adding more analytical complexity.

The future of customer experience optimization isn't about more sophisticated analysis. It's about making user behavior visible and actionable for the people who can immediately improve the experience. Sometimes the simpler tool that solves one problem well beats the sophisticated platform that solves many problems adequately.

This is the operational direction that digital analytics is moving toward: less analytical depth, more immediate utility. Tools that help teams make better decisions faster, rather than tools that help analysts generate more comprehensive reports.

Interestingly, this makes this space less interesting for me. Which is totally fine.

Path 2: Revenue Intelligence

The Audience Shift: From Product Teams to Revenue People

About 16 months ago, something changed in the type of projects I was getting approached for. I'd spent years working with product teams on classic product analytics—helping them set up tracking, build funnels, understand cohort reports, and get insights from their behavioral data.

But I started getting contacted by a completely different profile: revenue people. CFOs, Chief Revenue Officers, heads of growth, or the data teams working for them. And they were asking fundamentally different questions.

"We Only See Revenue as a Post-Fact"

Their problem was consistent across companies: "Right now, we're looking at revenue as a post thing. We see the sales numbers for a specific week or month in our BI reports. We can break it down by product, maybe by marketing channel. But that's it. We don't have much to work with for planning or improving our revenue."

This was interesting to me because these were the people who actually controlled budgets and made strategic decisions about company growth. And they felt blind to the leading indicators that could help them understand how revenue gets generated.

They wanted to see beyond the final numbers. Questions like: "How many accounts do we lose because we never activate them properly? How much revenue potential are we leaving on the table? When we see usage patterns declining, can we predict churn risk before it shows up in cancellations?"

The Demand for Early Intervention Signals

What struck me was how different their needs were from traditional product analytics. Product teams wanted to understand user behavior to improve features. Revenue people wanted to understand the entire pipeline from first touch to recurring revenue, with the ability to intervene before problems became expensive.

They needed predictive signals, not just historical reports. They wanted to identify accounts at risk of churning before the churn happened. They wanted to spot revenue expansion opportunities while there was still time to act on them. They wanted to understand which early user behaviors actually predicted long-term value.

Most importantly, they wanted to combine different data sources that had never been connected before. They had rich behavioral data from their product, subscription data from their billing systems, and marketing data from their campaigns. But these lived in separate systems and were never analyzed together in a way that could predict business outcomes.

Why Product Teams Weren't Enough

This shift made sense when I thought about it. Product teams, despite all the talk about being data-driven, often struggle to connect their work directly to business outcomes. They can tell you that Feature X has 40% adoption, but translating that into revenue impact requires assumptions and indirect measurements.

Revenue people don't have that luxury. They're accountable for actual business results, not engagement metrics or feature adoption rates. They need to understand the complete customer journey from acquisition through expansion and renewal, with clear visibility into where revenue gets created or lost.

The audience shift I experienced reflected a broader recognition that behavioral data is most valuable when it's connected to business outcomes, not when it's analyzed in isolation. Product analytics was always a step removed from the business metrics that executives actually cared about. Revenue intelligence closes that gap.

The Budget Reality

There's also a practical element: revenue people control bigger budgets and have more urgency around their problems. A CFO who can't predict churn risk or identify expansion opportunities is dealing with million-dollar blind spots. A Chief Revenue Officer who can't see early indicators of pipeline health is flying blind on the most important part of their job.

Product teams often struggle to justify analytics investments because the ROI is indirect and hard to measure. Revenue teams can directly calculate the value of better forecasting, earlier churn prediction, and improved conversion tracking. When you can prevent one high-value customer from churning, the analytics investment pays for itself.

This audience shift signals something important about where the analytics industry is heading. The future belongs to tools that can directly connect user behavior to business outcomes, not tools that provide interesting insights about user behavior in isolation.

Breaking Free from SDK Limitations

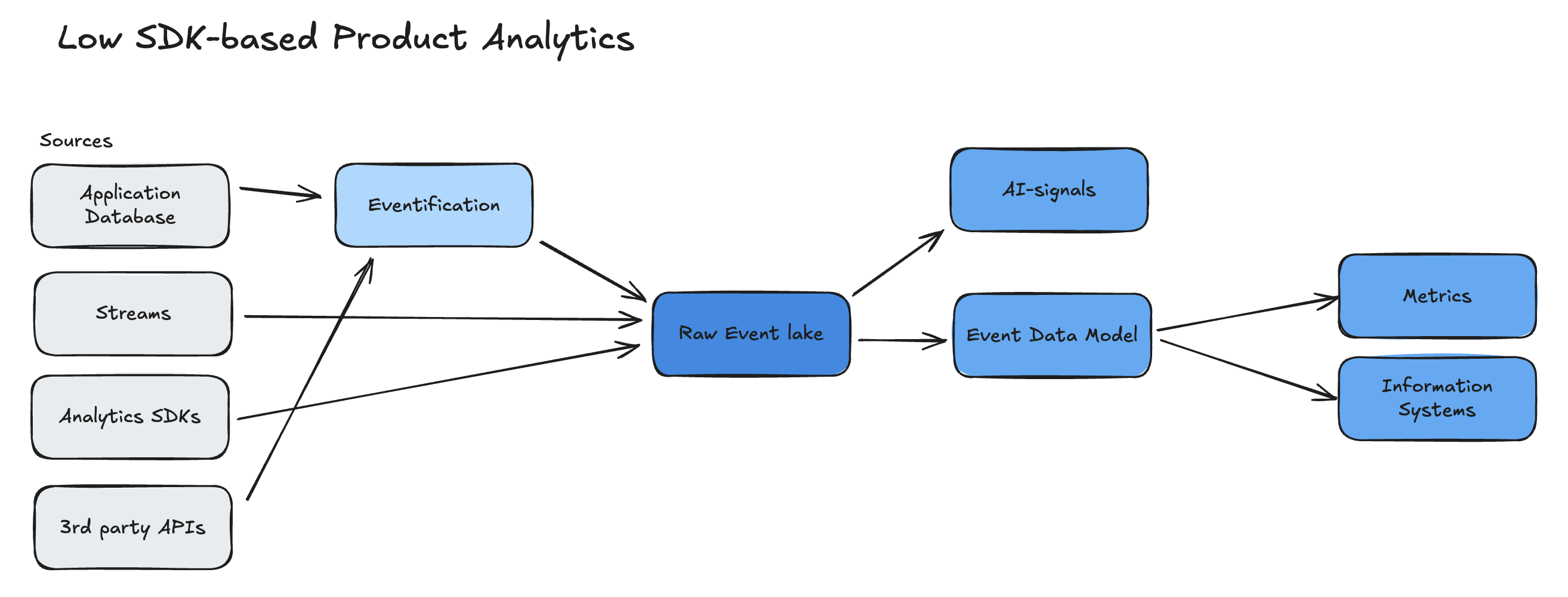

The breakthrough moment for me came when I realized that what I wanted to achieve wasn't possible with SDK-based tracking systems anymore. This was the essential step to understanding how to bridge the gap between user behavior and business outcomes.

The Fundamental Problems with SDK Tracking

SDK-based tracking means sending events from the browser or server using tracking libraries. You implement code that fires events when users perform actions, and those events get sent to your analytics platform. This approach has severe limitations when you're trying to build serious business intelligence.

First, you never have guaranteed 100% data delivery. Networks fail, browsers crash, users navigate away before events finish sending. The worst projects I worked on were the ones where we tried comparing analytics numbers to actual business data—like comparing "account created" events in Amplitude to actual account records in the database. The numbers never matched because tracking isn't designed for guaranteed delivery.
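To make that mismatch concrete, a reconciliation check can compare the IDs seen in tracking against the IDs in the application database. This is a minimal sketch with made-up data; the function name `reconcile` and both ID lists are hypothetical and not taken from any specific project or tool.

```python
def reconcile(tracked_ids, database_ids):
    """Compare IDs seen in tracking against IDs in the source-of-truth database."""
    tracked = set(tracked_ids)
    recorded = set(database_ids)
    missing = recorded - tracked      # records that exist but were never tracked
    unexpected = tracked - recorded   # tracked events with no matching record
    capture_rate = len(recorded & tracked) / len(recorded) if recorded else 0.0
    return {
        "capture_rate": capture_rate,
        "missing": sorted(missing),
        "unexpected": sorted(unexpected),
    }


# Made-up illustration data: five accounts in the database, four tracked events.
db_accounts = ["a1", "a2", "a3", "a4", "a5"]
tracked_events = ["a1", "a2", "a4", "zz"]

report = reconcile(tracked_events, db_accounts)
print(report)
```

A capture rate below 1.0 is the gap described above: accounts that exist in the database but never produced an "account created" event.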

Second, SDK tracking requires significant implementation and maintenance work from developers. And here's the problem: tracking isn't their main job. Their main job is building the product. So you constantly hit this tension where data quality depends on developer time and attention, but developers have other priorities. The tracking setup slowly degrades over time as features change and tracking code doesn't get updated.

The Data Warehouse Approach

The solution was to approach this like a classic data project instead of a tracking project. Treat different data sources as inputs, bring them together in a data warehouse, apply a well-designed data model, and then create the metrics and insights that different teams need.

This meant getting off the platforms and building everything in the data warehouse where I could control data quality, combine multiple sources, and ensure complete data capture.

In my current project, we track only 2-3 events with SDKs. Everything else—10-15 additional events—comes from other sources: external systems, application databases, webhook data. We identify the information we need from existing data sources and transform it into event data rather than trying to track everything through code.
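As a rough illustration of turning existing records into event data, here is a small sketch. The table shapes, field names, and the `rows_to_events` helper are all assumptions made for the example; in a real setup this logic would more likely live in SQL or a transformation layer inside the warehouse.

```python
from datetime import datetime


def rows_to_events(rows, event_name, id_field, time_field, property_fields=()):
    """Turn rows from an existing table into event records."""
    events = []
    for row in rows:
        events.append({
            "event": event_name,
            "account_id": row[id_field],
            "timestamp": datetime.fromisoformat(row[time_field]),
            "properties": {field: row[field] for field in property_fields},
        })
    return events


# Hypothetical rows, standing in for an application database and a billing system.
accounts = [
    {"id": "acc_1", "created_at": "2025-01-03T09:15:00", "plan": "free"},
    {"id": "acc_2", "created_at": "2025-01-04T14:30:00", "plan": "free"},
]
subscriptions = [
    {"account_id": "acc_1", "started_at": "2025-01-10T08:00:00", "plan": "pro"},
]

# One combined, time-ordered event stream built without any tracking code.
events = sorted(
    rows_to_events(accounts, "account_created", "id", "created_at", ["plan"])
    + rows_to_events(subscriptions, "subscription_started", "account_id", "started_at", ["plan"]),
    key=lambda e: e["timestamp"],
)

for e in events:
    print(e["timestamp"].isoformat(), e["event"], e["account_id"])
```

Because the events are derived from stored rows, changing an event definition later only means rerunning the transformation over the same rows.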

The Technical Benefits

This approach solves multiple problems simultaneously:

Complete data capture: When you pull events from database records, you get 100% coverage. Every account creation, subscription change, or product interaction gets captured because it has to be recorded in the database for the application to function.

Better data quality control: You can run data quality tests, monitor for anomalies, and fix issues retroactively. If you discover a problem with how you defined an event six months ago, you can reprocess the historical data. Try doing that with SDK-based tracking.

Sophisticated identity resolution: You can spend time properly stitching accounts across different systems. When you have HubSpot data and product usage data, you can determine exactly how well they match and what percentage of accounts you can connect. It becomes an engineering problem with measurable solutions, not something you just hope works.

Retroactive changes: You can create new events from historical data, add calculated properties to existing events, or fix data quality issues. This is impossible with SDK tracking but trivial in a data warehouse.
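The identity resolution point can be sketched as a measurable match rate. The example below assumes email is the join key and that both systems expose it; real stitching usually needs more than one key. All record shapes and names here are hypothetical.

```python
def normalize_email(email):
    """Lowercase and trim so trivial formatting differences don't block a match."""
    return email.strip().lower()


def match_rate(crm_contacts, product_accounts):
    """Return the share of CRM contacts that can be linked to a product account."""
    product_by_email = {
        normalize_email(a["email"]): a["account_id"] for a in product_accounts
    }
    matched = {}
    unmatched = []
    for contact in crm_contacts:
        key = normalize_email(contact["email"])
        if key in product_by_email:
            matched[contact["crm_id"]] = product_by_email[key]
        else:
            unmatched.append(contact["crm_id"])
    rate = len(matched) / len(crm_contacts) if crm_contacts else 0.0
    return rate, matched, unmatched


# Made-up records standing in for CRM contacts and product accounts.
crm = [
    {"crm_id": "hs_1", "email": "Ana@Example.com "},
    {"crm_id": "hs_2", "email": "ben@example.com"},
    {"crm_id": "hs_3", "email": "cara@example.com"},
    {"crm_id": "hs_4", "email": "dev@other.io"},
]
product = [
    {"account_id": "acc_1", "email": "ana@example.com"},
    {"account_id": "acc_2", "email": "BEN@example.com"},
    {"account_id": "acc_3", "email": "cara@example.com"},
]

rate, matched, unmatched = match_rate(crm, product)
print(f"match rate: {rate:.0%}, unmatched: {unmatched}")
```

The unmatched list is the useful part: it tells you which records to investigate, which is what turns stitching into an engineering problem with a measurable result.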

Beyond Event Analytics

But the bigger breakthrough is that this approach enables you to combine behavioral data with all the other business data that traditional analytics never touched. You can connect product usage patterns to subscription changes, marketing attribution to customer lifetime value, and support ticket volume to churn risk.

When you build everything in the data warehouse, you can create synthetic events that represent business outcomes rather than just user actions. You can generate events like "account became at-risk" or "expansion opportunity identified" based on complex logic that combines multiple data sources.
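A synthetic event like "account became at-risk" could be derived along these lines. The rule used here (recent average usage below half of the previous average) and the two-week window are assumptions chosen for illustration, not a recommended definition of churn risk.

```python
def at_risk_events(weekly_usage, drop_threshold=0.5, window=2):
    """Emit a synthetic event for accounts whose recent usage dropped sharply.

    weekly_usage maps account_id -> list of weekly activity counts, oldest first.
    """
    events = []
    for account_id, counts in weekly_usage.items():
        if len(counts) < 2 * window:
            continue  # not enough history to compare two windows
        previous_avg = sum(counts[-2 * window:-window]) / window
        recent_avg = sum(counts[-window:]) / window
        if previous_avg > 0 and recent_avg < previous_avg * drop_threshold:
            events.append({
                "event": "account_became_at_risk",
                "account_id": account_id,
                "properties": {
                    "previous_avg": previous_avg,
                    "recent_avg": recent_avg,
                },
            })
    return events


# Made-up weekly activity counts per account.
usage = {
    "acc_1": [40, 42, 15, 10],  # sharp decline
    "acc_2": [20, 22, 21, 19],  # stable
    "acc_3": [5, 3],            # too little history
}

risk_events = at_risk_events(usage)
print(risk_events)
```

The output has the same shape as any other event, so it can sit in the same event table and feed the same funnels and alerts.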

This is where revenue intelligence becomes possible. You're not limited to analyzing what users clicked or which pages they visited. You can analyze the complete customer journey from first marketing touch through renewal and expansion, with all the business context that makes behavioral data actually meaningful.

The SDK approach kept digital analytics trapped in a world of user actions without business context. Moving to the data warehouse approach finally enables you to connect user behavior to business outcomes in a rigorous, measurable way.

Building the Assembly Line to Revenue

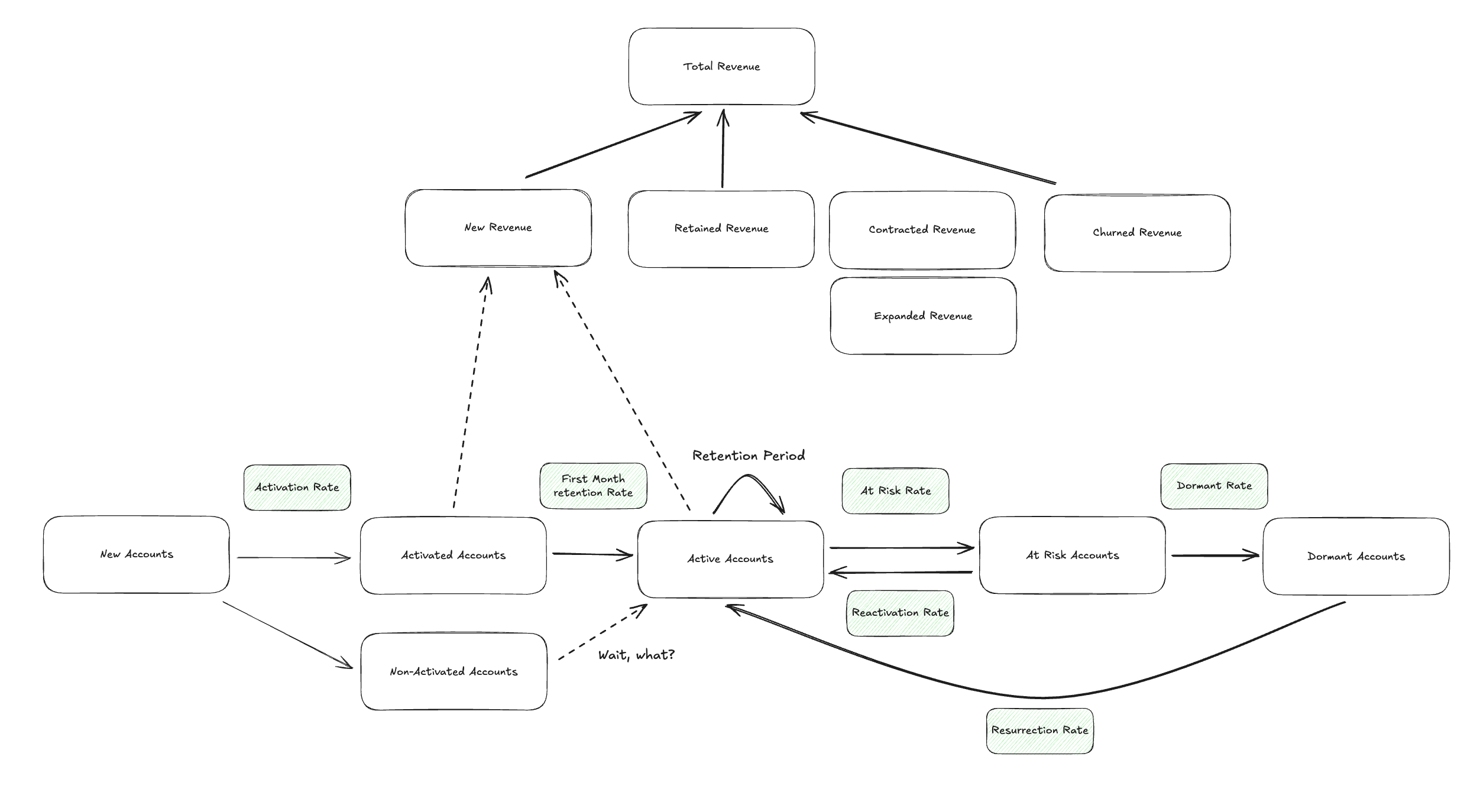

This is where revenue intelligence becomes practical. Instead of looking at revenue as a mysterious black box that either goes up or down, you can map out the entire assembly line that produces it—and identify exactly where the breakdowns happen.

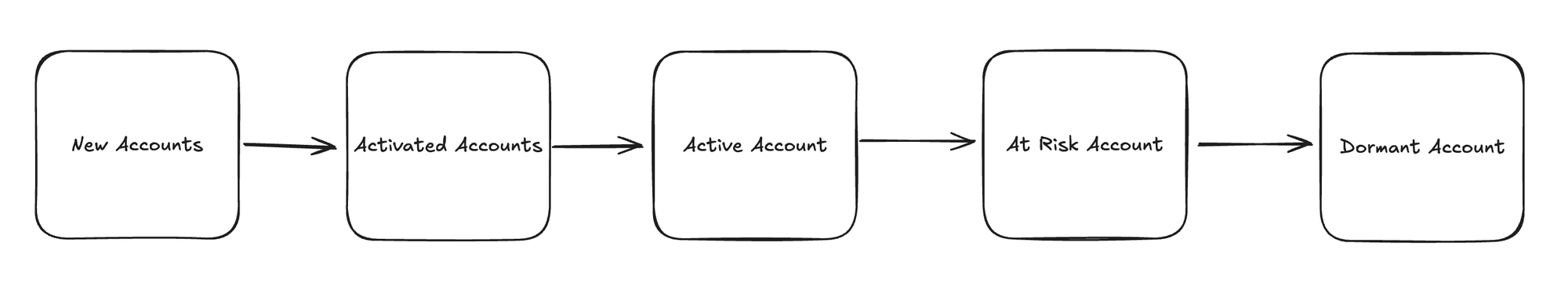

The Pipeline That Actually Matters

Let me give you a concrete example. You're a SaaS company that gets 1,000 new accounts this month. Without revenue intelligence, you'd track this as "1,000 new signups" and maybe celebrate the growth. Three months later, you'd see that very few of them converted to paid subscriptions, but you wouldn't know why.

With revenue intelligence, you track the complete pipeline: 1,000 new accounts, but only 100 reach an "activated" state—meaning they've done something in your product that gives them a real sense of what value it can deliver. That means you're losing 900 accounts before they even understand what they signed up for.

This isn't just an interesting metric. It's a business emergency with a clear dollar value attached. You can calculate exactly how much revenue potential you're losing by failing to activate 90% of new accounts. More importantly, you can start investigating why activation is so low and test interventions to improve it.
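The dollar value can be worked out with simple arithmetic. Only the 1,000 accounts and 100 activations come from the example above; the paid conversion rate, the revenue per customer, and the target activation rate are made-up assumptions, so treat the result as an illustration of the calculation rather than a benchmark.

```python
def expected_annual_revenue(activated_accounts, paid_conversion_rate, revenue_per_customer):
    """Revenue expected from a cohort, given how many accounts reached activation."""
    return activated_accounts * paid_conversion_rate * revenue_per_customer


NEW_ACCOUNTS = 1000             # from the example above
ACTIVATED_TODAY = 100           # from the example above (10% activation)
PAID_CONVERSION_RATE = 0.20     # assumption: share of activated accounts that pay
REVENUE_PER_CUSTOMER = 1200     # assumption: annual revenue per paying account
TARGET_ACTIVATION_RATE = 0.20   # assumption: activation after fixing onboarding

current = expected_annual_revenue(
    ACTIVATED_TODAY, PAID_CONVERSION_RATE, REVENUE_PER_CUSTOMER
)
activated_at_target = round(NEW_ACCOUNTS * TARGET_ACTIVATION_RATE)
target = expected_annual_revenue(
    activated_at_target, PAID_CONVERSION_RATE, REVENUE_PER_CUSTOMER
)
left_on_table = target - current

print(f"current: {current:,.0f}  target: {target:,.0f}  gap: {left_on_table:,.0f}")
```

With these assumed inputs, moving activation from 10% to 20% doubles the expected revenue from the same monthly cohort, which is the kind of number that gets a budget conversation started.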

Early Intervention Opportunities

Traditional business intelligence tells you what happened after it's too late to fix it. Revenue intelligence gives you signals when you can still do something about it.

When you see an account's usage declining, you can flag them as at-risk before they actually churn. When you identify patterns that predict expansion opportunities, you can reach out while customers are still receptive. When you spot activation problems, you can fix the onboarding experience before you lose more potential customers.

This is fundamentally different from the "post-fact" reporting that revenue teams complained about. Instead of looking at last month's churn numbers and wondering what went wrong, you're getting alerts about accounts that might churn next month—while there's still time to save them.

Connecting the Dots

The real power comes from connecting behavioral patterns to business outcomes across the entire customer lifecycle. You can see that accounts that complete specific onboarding actions within their first week have 3x higher lifetime value. You can identify that accounts from certain marketing channels take longer to activate but have better retention once they do.

This kind of analysis was impossible with traditional analytics because you couldn't connect the behavioral data to the business outcomes data in a meaningful way. Revenue intelligence bridges that gap by building everything in the data warehouse where you can combine subscription data, product usage data, marketing attribution data, and support interaction data into a single model.

The Metric Structure That Explains Growth

What you end up with is a metric tree or growth model that explains how revenue gets generated rather than just measuring how much revenue you got. You can see the conversion rates at each stage of the actual customer journey: from visitor to account to activated user to trial subscriber to paying customer to expanded account.

When revenue growth slows down, you can immediately identify which part of the pipeline is broken. Are you getting fewer new accounts? Is activation declining? Are paying customers churning faster? Each problem has different solutions, but you can only fix what you can see.
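That diagnostic step can be sketched as a comparison of stage-to-stage conversion rates between two periods. The stage names follow the journey described above; the counts are invented, and picking the step with the largest absolute drop is just one possible rule.

```python
STAGES = ["visitor", "account", "activated", "trial", "paying", "expanded"]


def stage_conversion(counts):
    """Conversion rate from each stage to the next one."""
    return {
        f"{a}->{b}": (counts[b] / counts[a] if counts[a] else 0.0)
        for a, b in zip(STAGES, STAGES[1:])
    }


def biggest_drop(previous, current):
    """Return the pipeline step whose conversion rate fell the most (absolute change)."""
    prev_rates = stage_conversion(previous)
    cur_rates = stage_conversion(current)
    return min(prev_rates, key=lambda step: cur_rates[step] - prev_rates[step])


# Made-up monthly counts per stage.
last_month = {"visitor": 20000, "account": 1000, "activated": 200,
              "trial": 100, "paying": 40, "expanded": 8}
this_month = {"visitor": 21000, "account": 1050, "activated": 105,
              "trial": 52, "paying": 21, "expanded": 4}

broken_step = biggest_drop(last_month, this_month)
print("largest conversion drop:", broken_step)
```

In this invented data the top of the funnel grew while paying customers fell, and the comparison points at activation as the step to investigate first.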

This diagnostic capability is what revenue teams have been missing. They could see the symptoms (revenue growth slowing) but not the underlying causes.

Beyond Traditional Analytics

This approach solves the fundamental problem that always limited digital analytics: the disconnect between user behavior and business impact. Traditional analytics could tell you that users clicked buttons and visited pages, but couldn't tell you which of those actions actually mattered for the business.

Revenue intelligence flips this around. It starts with the business outcomes that matter—revenue, retention, expansion—and works backward to identify which user behaviors actually predict those outcomes. Instead of measuring everything and hoping some of it is important, you measure the things that demonstrably drive business results.

The difference is profound. Revenue teams finally get the forward-looking insights they need to manage growth proactively. And for the first time, analytics becomes genuinely strategic rather than just informational—because it directly enables better decisions about the activities that drive business success.

Wow, that's 7,500 words you've read so far. I had a lot of thoughts, and I hope they were useful in some way.

Digital analytics as we knew it is over. The foundation it was built on—marketing attribution and the promise of data-driven decision making—has crumbled. GA4's disaster accelerated the collapse, but the underlying problems run much deeper.

Two directions are emerging from the wreckage:

Customer Experience Optimization represents the operational future. Tools that enable immediate action rather than deep analysis. AI agents that suggest specific improvements rather than generate reports. Systems built for marketing teams who need speed, not sophistication.

Revenue Intelligence represents the strategic future. Analytics that finally connects user behavior to business outcomes. Data warehouse approaches that combine behavioral patterns with subscription data, marketing attribution, and business metrics to predict and prevent revenue problems before they happen.

Both solve different pieces of the original promise that digital analytics never delivered. Neither looks much like the analytics we've known for the past 20 years.

The era of collecting data and hoping it's useful is ending. What's beginning is the era of systems that either enable immediate operational improvements or directly predict business outcomes. Everything else is just data theater.

Which brings us back to Amplitude, where this post started.

This is my opinion (without having any clue about their strategy): clinging to the old marketing analytics past will not get them into the future. Yes, it can be an entry point to marketing teams that still cling to GA, a way to get them to let go and start with Amplitude.

Their real future is in the operational customer experience space. They have all the ingredients, now they need to cook. And with that in mind, I can't understand why they picked a person from the "old" analytics world to spread the word. They had that with Adam Greco, and he moved on to a modern CDP approach.

But Amplitude has always played the game on different levels at the same time, so they might do the same here as well. We will see.

Interestingly, everyone I worked closely with at Amplitude has now moved on. This could mean something, or nothing.

Revenue intelligence will not be an opportunity for them. They tried to push the warehouse integration, but I guess most companies don't have the data setup to go that way. And to be honest, Amplitude is not flexible enough as a tool to support this use case.

But that is my view, and obviously a very opinionated one. What are your thoughts? Connect with me on LinkedIn and send me your opinion as a direct message.

Or join the new sanctuary that Juliana and I are building for all minds in analytics and growth that love to call out BS and really want to do stuff that works and makes an impact: ALT+. We have thoughtful discussions like this one, and we run monthly deep-dive cohorts to learn together about fundamental and new concepts in growth, strategy, and operations.

Head over to https://goalt.plus and join the waitlist. We are opening up by the end of September 2025, and we are limiting the initial members to 50 in the first month.
